Ticket #846 (closed maintenance: fixed)

Load Spikes on BOA PuffinServer

| Reported by: | chris | Owned by: | chris |
|---|---|---|---|
| Priority: | major | Milestone: | Maintenance |
| Component: | Live server | Keywords: | |
| Cc: | ade, annesley, paul, sam, kate | Estimated Number of Hours: | 0.0 |
| Add Hours to Ticket: | 0 | Billable?: | yes |
| Total Hours: | 12.59 | | |

Description (last modified by chris)

Creating this as a ticket to record load spikes and related site outages.

See wiki:PuffinServer#LoadSpikes for links to historic issues of this nature.


Change History

comment:1 Changed 20 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.0 to 0.25

comment:3 Changed 19 months ago by chris

There was a load spike just now:

    Date: Sun, 19 Apr 2015 11:15:49 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 45.80
    Time: Sun Apr 19 11:15:44 2015 +0100
    1 Min Load Avg: 75.88
    5 Min Load Avg: 45.80
    15 Min Load Avg: 23.44
    Running/Total Processes: 32/518

I think this was high enough for the web server to be stopped; I also got a Pingdom and a Nagios alert about it.
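The lfd (csf login failure daemon) alert bodies use a fixed layout, so spikes can be tabulated and compared over time by extracting their fields. A minimal Python sketch, assuming the body text looks like the alerts quoted in this ticket (it also copes with the flattened single-line form); the function name parse_lfd_load_alert is hypothetical and not part of csf/lfd:

```python
import re
from datetime import datetime

# Alert body copied from the 19 Apr 2015 email above.
ALERT = """Time: Sun Apr 19 11:15:44 2015 +0100
1 Min Load Avg: 75.88
5 Min Load Avg: 45.80
15 Min Load Avg: 23.44
Running/Total Processes: 32/518"""

TIME_RE = re.compile(r"Time: (\w{3} \w{3} +\d{1,2} \d{2}:\d{2}:\d{2} \d{4} [+-]\d{4})")
LOAD_RE = re.compile(r"\b(\d+) Min Load Avg: ([\d.]+)")
PROC_RE = re.compile(r"Running/Total Processes: (\d+)/(\d+)")


def parse_lfd_load_alert(body: str) -> dict:
    """Pull the timestamp, load averages and process counts out of an lfd alert."""
    time_match = TIME_RE.search(body)
    proc_match = PROC_RE.search(body)
    if time_match is None or proc_match is None:
        raise ValueError("not an lfd load average alert")
    return {
        # strptime treats the spaces in the format as \s+, so "Jun  2" parses too.
        "time": datetime.strptime(time_match.group(1), "%a %b %d %H:%M:%S %Y %z"),
        # Keys are the averaging period in minutes: 1, 5 and 15.
        "load": {int(m): float(v) for m, v in LOAD_RE.findall(body)},
        "running": int(proc_match.group(1)),
        "total": int(proc_match.group(2)),
    }


if __name__ == "__main__":
    alert = parse_lfd_load_alert(ALERT)
    print(alert["time"].isoformat(), alert["load"], alert["running"], alert["total"])
```

Feeding every alert email through this and sorting by time gives a simple table of when the spikes happened and how high they went.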

comment:4 Changed 19 months ago by chris

Another just now:

    Date: Wed, 22 Apr 2015 10:49:37 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 60.50
    Time: Wed Apr 22 10:49:37 2015 +0100
    1 Min Load Avg: 118.47
    5 Min Load Avg: 60.50
    15 Min Load Avg: 25.26
    Running/Total Processes: 42/524

comment:5 Changed 19 months ago by chris

It seems to have passed now; it spiked a bit higher than in the last comment:

    Date: Wed, 22 Apr 2015 11:09:31 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 80.03
    Time: Wed Apr 22 11:09:30 2015 +0100
    1 Min Load Avg: 121.18
    5 Min Load Avg: 80.03
    15 Min Load Avg: 44.00
    Running/Total Processes: 57/532

comment:6 Changed 19 months ago by chris

The email this is a reply to should have updated this ticket: /trac/ticket/846

This is a test to try to work out why it didn't.

On Thu 23-Apr-2015 at 10:43:51AM +0100, Ade Stuart wrote:

> The time frames for these peaks seem consistent. Is there a cron task
> happening between 10.45 and 11.15? My starter for ten would be that
> something is using up the system resource, and hence the spikes.
> Thoughts?

comment:7 Changed 19 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.25 to 0.5

OK, weird: my email worked. I have updated the whitelist in /etc/email2trac.conf; perhaps that was the cause. I did read ticket:494 and ticket:819 while trying to find an answer to this.

Regarding cron jobs on PuffinServer, perhaps, but there aren't any scheduled specifically for that time:

    * * * * * bash /var/xdrago/second.sh >/dev/null 2>&1
    * * * * * bash /var/xdrago/minute.sh >/dev/null 2>&1
    * * * * * bash /var/xdrago/runner.sh >/dev/null 2>&1
    * * * * * bash /var/xdrago/manage_ltd_users.sh >/dev/null 2>&1
    03 * * * * bash /var/xdrago/clear.sh >/dev/null 2>&1
    14 * * * * bash /var/xdrago/purge_binlogs.sh >/dev/null 2>&1
    01 0 * * * bash /var/xdrago/graceful.sh >/dev/null 2>&1
    08 0 * * * bash /var/xdrago/mysql_backup.sh >/dev/null 2>&1
    08 2 * * * bash /opt/local/bin/backboa backup >/dev/null 2>&1
    08 8 * * * bash /opt/local/bin/duobackboa backup >/dev/null 2>&1
    58 2 * * * bash /var/xdrago/daily.sh >/dev/null 2>&1
    58 5 * * * bash /var/xdrago/fetch-remote.sh >/dev/null 2>&1
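Ade's question can be checked mechanically by expanding each schedule over the 10:45 to 11:15 window. A minimal Python sketch, assuming only the field syntax that appears in this crontab ('*' or a single number; no ranges, lists or steps, and no special handling of cron's day-of-month/day-of-week rule); jobs_in_window and its helpers are hypothetical names:

```python
from datetime import datetime, timedelta

# Schedules from the PuffinServer crontab above (output redirection dropped).
CRONTAB = """\
* * * * * bash /var/xdrago/second.sh
* * * * * bash /var/xdrago/minute.sh
* * * * * bash /var/xdrago/runner.sh
* * * * * bash /var/xdrago/manage_ltd_users.sh
03 * * * * bash /var/xdrago/clear.sh
14 * * * * bash /var/xdrago/purge_binlogs.sh
01 0 * * * bash /var/xdrago/graceful.sh
08 0 * * * bash /var/xdrago/mysql_backup.sh
08 2 * * * bash /opt/local/bin/backboa backup
08 8 * * * bash /opt/local/bin/duobackboa backup
58 2 * * * bash /var/xdrago/daily.sh
58 5 * * * bash /var/xdrago/fetch-remote.sh
"""


def field_matches(field: str, value: int) -> bool:
    # Only '*' and single numbers are handled: that is all this crontab uses.
    return field == "*" or int(field) == value


def fires_at(fields: list, t: datetime) -> bool:
    minute, hour, day, month, weekday = fields
    return (field_matches(minute, t.minute)
            and field_matches(hour, t.hour)
            and field_matches(day, t.day)
            and field_matches(month, t.month)
            # cron numbers Sunday as 0; isoweekday() numbers it 7.
            and field_matches(weekday, t.isoweekday() % 7))


def jobs_in_window(crontab: str, start: datetime, end: datetime) -> dict:
    """Map each command to the HH:MM times it would fire in [start, end)."""
    hits = {}
    for line in crontab.strip().splitlines():
        parts = line.split(None, 5)
        fields, command = parts[:5], parts[5]
        t = start
        while t < end:
            if fires_at(fields, t):
                hits.setdefault(command, []).append(t.strftime("%H:%M"))
            t += timedelta(minutes=1)
    return hits


if __name__ == "__main__":
    window = jobs_in_window(CRONTAB, datetime(2015, 4, 19, 10, 45),
                            datetime(2015, 4, 19, 11, 15))
    for command, times in window.items():
        print(command, times if len(times) < 5 else f"every minute ({len(times)} runs)")
```

Run over Sunday 19 April 2015 from 10:45 to 11:15, it reports the four every-minute jobs plus clear.sh at 11:03 and purge_binlogs.sh at 11:14. Both of those run every hour, so on their own they do not single out that window.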

comment:8 Changed 19 months ago by chris

Just had a massive load spike:

    uptime
    19:16:12 up 267 days, 5:59, 1 user, load average: 198.45, 175.53, 159.21

Seems to be recovering...

comment:9 Changed 19 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.5 to 0.75

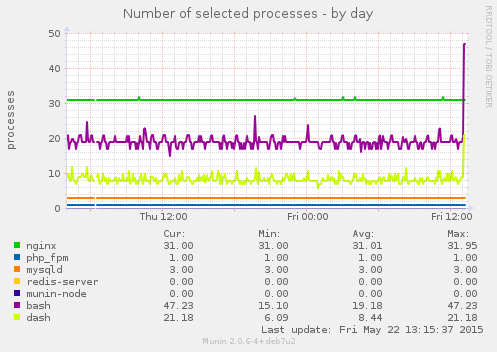

I did manage to ssh in (eventually) and get top to display what was going on just as the load was dropping off and it was bash and dash processes that were dominating so I have added dash and bash to this graph:

Note that the php5-fpm memory usage has risen sharply over the last few weeks.

I have also added the multips plugin to track the number of processes (the same ones as for multips_memory):

    [multips]
    env.names nginx php_fpm mysqld redis-server munin-node bash dash
    user root

    [multips_memory]
    env.names nginx php-fpm mysqld redis-server munin-node bash dash
    user root

BOA runs multiple bash and dash scripts all the time:

    ps -lA | grep dash
    4 S 0 9185 9175 1 80 0 - 1033 - ? 00:00:01 dash
    4 S 0 9190 9174 3 80 0 - 1033 - ? 00:00:03 dash
    4 S 0 11864 11861 0 80 0 - 1033 - ? 00:00:00 dash
    4 S 0 11872 11865 0 80 0 - 1033 - ? 00:00:00 dash
    4 S 0 11875 11866 0 80 0 - 1033 - ? 00:00:00 dash
    4 S 0 58966 58959 0 80 0 - 1033 - ? 00:00:00 dash

    ps -lA | grep bash
    0 S 0 11924 11875 0 80 0 - 2721 - ? 00:00:00 bash
    4 S 0 15765 15759 0 80 0 - 2693 - ? 00:00:00 bash
    0 S 0 15803 15764 0 80 0 - 2721 - ? 00:00:00 bash
    0 S 0 15805 15762 0 80 0 - 2698 - ? 00:00:00 bash
    0 S 1000 39464 39201 0 80 0 - 5060 - pts/0 00:00:06 bash
    4 S 0 40513 40175 0 80 0 - 5217 - pts/0 00:00:15 bash
    0 S 0 51716 1 2 80 0 - 3180 - ? 2-05:47:25 bash
    0 S 0 51721 1 2 80 0 - 3180 - ? 2-05:22:41 bash
    0 S 0 51726 1 2 80 0 - 3180 - ? 2-04:59:57 bash
    0 S 0 51731 1 2 80 0 - 3180 - ? 2-05:05:06 bash
    0 S 0 51736 1 2 80 0 - 3180 - ? 2-05:12:54 bash
    4 S 0 58973 58966 0 80 0 - 2700 - ? 00:00:00 bash

I also removed the pure-ftpd server from this list as we are no longer running it.

PS: ticket:852 was closed as a duplicate of this ticket.
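In the ps -lA output in comment 9, the TIME column is cumulative CPU time in [DD-]HH:MM:SS form, and five bash processes whose parent is PID 1 (51716 to 51736) have each used more than two days of CPU, which may be worth a closer look. A minimal Python sketch for flagging such processes, assuming the default ps -l column layout (F S UID PID PPID C PRI NI ADDR SZ WCHAN TTY TIME CMD) with single-word commands; cpu_seconds and heavy_processes are hypothetical helper names:

```python
# Output of `ps -lA | grep bash` copied from comment 9.
PS_BASH = """\
0 S 0 11924 11875 0 80 0 - 2721 - ? 00:00:00 bash
4 S 0 15765 15759 0 80 0 - 2693 - ? 00:00:00 bash
0 S 0 15803 15764 0 80 0 - 2721 - ? 00:00:00 bash
0 S 0 15805 15762 0 80 0 - 2698 - ? 00:00:00 bash
0 S 1000 39464 39201 0 80 0 - 5060 - pts/0 00:00:06 bash
4 S 0 40513 40175 0 80 0 - 5217 - pts/0 00:00:15 bash
0 S 0 51716 1 2 80 0 - 3180 - ? 2-05:47:25 bash
0 S 0 51721 1 2 80 0 - 3180 - ? 2-05:22:41 bash
0 S 0 51726 1 2 80 0 - 3180 - ? 2-04:59:57 bash
0 S 0 51731 1 2 80 0 - 3180 - ? 2-05:05:06 bash
0 S 0 51736 1 2 80 0 - 3180 - ? 2-05:12:54 bash
4 S 0 58973 58966 0 80 0 - 2700 - ? 00:00:00 bash
"""


def cpu_seconds(field: str) -> int:
    """Convert a ps TIME value such as '00:00:15' or '2-05:47:25' to seconds."""
    days, _, hms = field.rpartition("-")
    hours, minutes, seconds = (int(x) for x in hms.split(":"))
    return int(days or 0) * 86400 + hours * 3600 + minutes * 60 + seconds


def heavy_processes(ps_output: str, min_seconds: int):
    """Return (pid, ppid, command, cpu_seconds) for rows at or above min_seconds."""
    rows = []
    for line in ps_output.splitlines():
        cols = line.split(None, 13)
        # Skip headers, blank lines and anything not shaped like a ps -l row.
        if len(cols) != 14 or not cols[3].isdigit():
            continue
        used = cpu_seconds(cols[12])
        if used >= min_seconds:
            rows.append((int(cols[3]), int(cols[4]), cols[13], used))
    return rows


if __name__ == "__main__":
    for pid, ppid, cmd, used in heavy_processes(PS_BASH, 86400):
        print(f"{cmd} pid={pid} ppid={ppid} cpu={used / 86400:.1f} days")
```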

comment:10 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.75 to 1.0

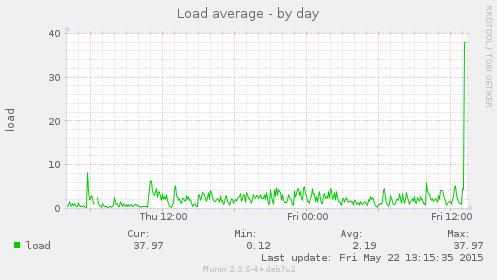

We just had a load spike:

    Date: Fri, 22 May 2015 13:14:39 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 41.22
    Time: Fri May 22 13:14:39 2015 +0100
    1 Min Load Avg: 74.26
    5 Min Load Avg: 41.22
    15 Min Load Avg: 18.13
    Running/Total Processes: 37/509

And there was a corresponding spike in bash and dash processes (not sure if this was the cause or a consequence):

Based on what top showed, I have added to the list of processes that the multips graphs track:

    [multips]
    env.names nginx php_fpm mysqld redis-server munin-node bash dash awk perl csf
    user root

    [multips_memory]
    env.names nginx php-fpm mysqld redis-server munin-node bash dash awk perl csf
    user root

See:

comment:11 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.18

- Total Hours changed from 1.0 to 1.18

We just had a rather large load spike; here is one of the email alerts:

    Date: Tue, 2 Jun 2015 17:19:28 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 151.07
    Time: Tue Jun 2 17:19:27 2015 +0100
    1 Min Load Avg: 165.73
    5 Min Load Avg: 151.07
    15 Min Load Avg: 94.10
    Running/Total Processes: 122/655

And the BOA firewall script has tried to block the Webarchitects Nagios monitoring server:

    Date: Tue, 2 Jun 2015 17:19:39 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: blocked 81.95.52.66 (GB/United Kingdom/nsa.rat.burntout.org)
    Time: Tue Jun 2 17:19:37 2015 +0100
    IP: 81.95.52.66 (GB/United Kingdom/nsa.rat.burntout.org)
    Failures: 5 (sshd)
    Interval: 300 seconds
    Blocked: Permanent Block (IP match in csf.allow, block may not work)
    Log entries:
    Jun 2 17:19:02 puffin sshd[32711]: Did not receive identification string from 81.95.52.66
    Jun 2 17:19:02 puffin sshd[32728]: Did not receive identification string from 81.95.52.66
    Jun 2 17:19:03 puffin sshd[32756]: Did not receive identification string from 81.95.52.66
    Jun 2 17:19:04 puffin sshd[310]: Did not receive identification string from 81.95.52.66
    Jun 2 17:19:07 puffin sshd[413]: Did not receive identification string from 81.95.52.66

But it looks like the block failed, as the server is whitelisted in csf.allow.
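The block email reports 5 sshd failures within a 300 second interval. A minimal Python sketch of that kind of per-IP sliding-window count, run over the five log entries lfd quoted; it is a simplified model for illustration only, assuming lfd just counts matching lines per IP inside the interval. It is not lfd's actual code, and it ignores the csf.allow whitelist; first_trigger is a hypothetical name:

```python
import re
from collections import defaultdict, deque
from datetime import datetime, timedelta

# The five log entries quoted in the lfd block email.
LOG = """\
Jun 2 17:19:02 puffin sshd[32711]: Did not receive identification string from 81.95.52.66
Jun 2 17:19:02 puffin sshd[32728]: Did not receive identification string from 81.95.52.66
Jun 2 17:19:03 puffin sshd[32756]: Did not receive identification string from 81.95.52.66
Jun 2 17:19:04 puffin sshd[310]: Did not receive identification string from 81.95.52.66
Jun 2 17:19:07 puffin sshd[413]: Did not receive identification string from 81.95.52.66
"""

LINE_RE = re.compile(
    r"^(\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) \S+ sshd\[\d+\]: "
    r"Did not receive identification string from (\S+)$"
)


def first_trigger(lines, failures=5, interval=timedelta(seconds=300), year=2015):
    """Return (ip, time) when an IP first reaches `failures` hits inside `interval`."""
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    for line in lines:
        match = LINE_RE.match(line)
        if match is None:
            continue
        # syslog lines carry no year, so it has to be supplied.
        when = datetime.strptime(f"{year} {match.group(1)}", "%Y %b %d %H:%M:%S")
        window = recent[match.group(2)]
        window.append(when)
        # Drop hits that have fallen out of the interval.
        while when - window[0] > interval:
            window.popleft()
        if len(window) >= failures:
            return match.group(2), when
    return None


if __name__ == "__main__":
    print(first_trigger(LOG.splitlines()))
```

Under this model the fifth failure at 17:19:07 is what trips the threshold, which fits the monitoring server opening several SSH connections in quick succession while the server was too loaded to answer them.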

I can't ssh to the server: the connection times out. I can access the XenShell, but I don't have a password to hand, as I always use ssh keys, so I can't log in.

So we can either wait for it to recover or I can shut it down and restart it. I'll wait 5 minutes and see if it recovers.

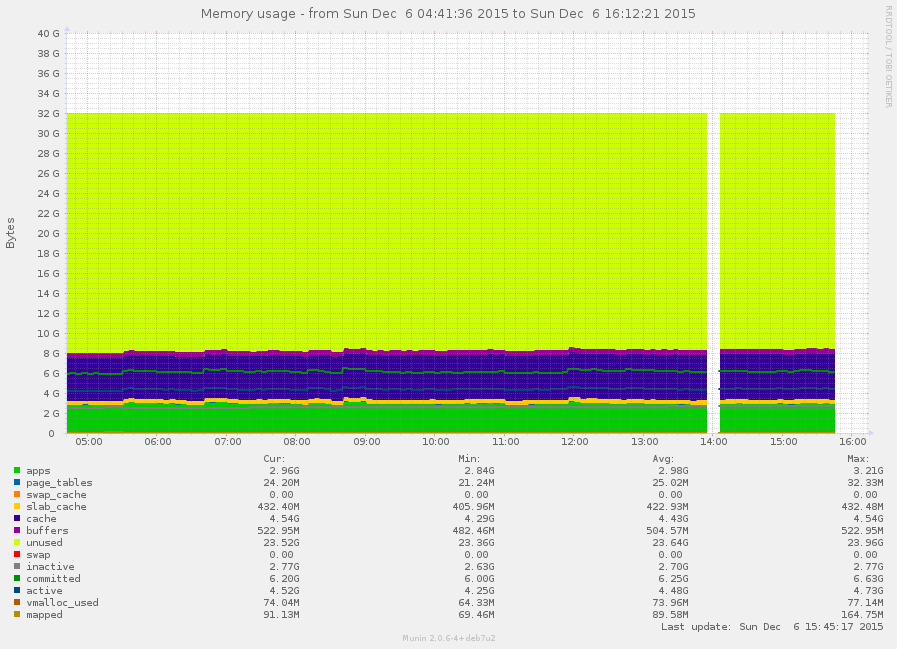

Note that the server load has been so hight that it hasn't been able to generate any Munin stats -- we simply have gap in the graphs:

comment:12 Changed 18 months ago by chris

Here is another of the emails; when this one was sent, the 1 minute load average was 180:

    Date: Tue, 2 Jun 2015 17:21:48 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 161.05
    Time: Tue Jun 2 17:21:47 2015 +0100
    1 Min Load Avg: 180.22
    5 Min Load Avg: 161.05
    15 Min Load Avg: 105.43
    Running/Total Processes: 174/862

comment:13 Changed 18 months ago by chris

The website is producing 502 Bad Gateway errors, which means Nginx is up and running but can't get a response from php-fpm.
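When Nginx returns 502, a quick way to see whether php-fpm is even accepting connections is to try its listening socket directly. A minimal Python sketch; the socket path is a hypothetical placeholder (the real value is the listen directive in the php-fpm pool configuration), and a successful connect only shows that the socket accepts connections, not that PHP requests are being served promptly:

```python
import socket

# Hypothetical path: substitute the "listen" value from the php-fpm pool config.
PHP_FPM_SOCKET = "/var/run/php5-fpm.sock"


def unix_socket_accepts(path: str, timeout: float = 2.0) -> bool:
    """Return True if a stream connection to the Unix socket at path succeeds."""
    sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        sock.connect(path)
        return True
    except OSError:  # covers a missing socket file, refused connections and timeouts
        return False
    finally:
        sock.close()


if __name__ == "__main__":
    state = "accepting" if unix_socket_accepts(PHP_FPM_SOCKET) else "not accepting"
    print(PHP_FPM_SOCKET, state)
```

If the pool listens on TCP instead, socket.create_connection((host, port), timeout) does the equivalent check.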

comment:14 Changed 18 months ago by chris

I'm going to shut it down and restart it.

comment:15 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 1.18 to 1.28

Running xm shutdown puffin.webarch.net didn't shut the server down, so I ran:

    xm destroy puffin.webarch.net
    xm create puffin.webarch.net.cfg

And it's on its way back up now.
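The recovery step above (ask the guest to shut down and, if it doesn't, destroy it and create it again from its config file) could be scripted on the Xen host. A minimal Python sketch using the same xm subcommands; the wait and poll intervals are arbitrary, and the default liveness check assumes that xm list DOMAIN exits non-zero once the domain no longer exists, which should be confirmed on the host before relying on it. restart_domain is a hypothetical name:

```python
import subprocess
import time


def xm(*args):
    """Run an xm subcommand on the Xen host and capture its output."""
    return subprocess.run(["xm", *args], capture_output=True, text=True)


def restart_domain(domain, config, run=xm, is_running=None,
                   wait_seconds=120, poll_seconds=5, sleep=time.sleep):
    """Try a clean shutdown first; destroy the domain only if it is still up.

    Returns True if a forced destroy was needed.
    """
    if is_running is None:
        # Assumption: `xm list DOMAIN` exits non-zero once the domain is gone.
        is_running = lambda: run("list", domain).returncode == 0
    run("shutdown", domain)
    waited = 0
    while is_running() and waited < wait_seconds:
        sleep(poll_seconds)
        waited += poll_seconds
    forced = is_running()
    if forced:
        run("destroy", domain)  # terminates the guest immediately, like pulling the power
    run("create", config)
    return forced


if __name__ == "__main__":
    restart_domain("puffin.webarch.net", "puffin.webarch.net.cfg")
```

The run, is_running and sleep parameters are injectable so the control flow can be tried out without touching a real domain.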

comment:16 Changed 18 months ago by chris

It's up again.

comment:17 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.28 to 1.53

This alert shows only a modest load spike on PuffinServer, but the load must have got a lot higher:

    Date: Thu, 4 Jun 2015 09:54:16 +0100 (BST)
    From: root@puffin.webarch.net
    To: chris@webarchitects.co.uk
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 14.22
    Time: Thu Jun 4 09:52:36 2015 +0100
    1 Min Load Avg: 39.15
    5 Min Load Avg: 14.22
    15 Min Load Avg: 5.67
    Running/Total Processes: 47/430

It was not responding to HTTP or SSH requests, but I'm now logged in and it is recovering:

    uptime
    10:12:11 up 1 day, 16:18, 1 user, load average: 89.40, 126.87, 103.35

Looking in /var/log/syslog, it was a kernel issue, and we have had this a few times before. This is what we have in syslog:

    Jun 4 09:49:50 puffin mysqld: 150604 9:49:50 [Warning] Aborted connection 294666 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error)
    Jun 4 09:49:51 puffin mysqld: 150604 9:49:51 [Warning] Aborted connection 294667 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error)
    Jun 4 09:49:51 puffin mysqld: 150604 9:49:51 [Warning] Aborted connection 294665 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error)
    Jun 4 09:49:52 puffin mysqld: 150604 9:49:51 [Warning] Aborted connection 294664 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error)
    Jun 4 09:50:03 puffin kernel: [143763.270448] INFO: task vnstatd:2101 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.270470] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.270479] vnstatd D ffff8801fb30d4c0 0 2101 1 0x00000000
    Jun 4 09:50:03 puffin kernel: [143763.270490] ffff8801fb30d4c0 0000000000000282 ffff8801ffc15000 ffff880000009680
    Jun 4 09:50:03 puffin kernel: [143763.270503] ffff8801fd5a9d58 ffffffff81012cdb 000000000000f9e0 ffff8801fd5a9fd8
    Jun 4 09:50:03 puffin kernel: [143763.270514] 00000000000157c0 00000000000157c0 ffff8801ff2a0710 ffff8801ff2a0a08
    Jun 4 09:50:03 puffin kernel: [143763.270525] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.270537] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.270548] [<ffffffff81155196>] ? cap_inode_permission+0x0/0x3
    Jun 4 09:50:03 puffin kernel: [143763.270576] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.270587] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.270595] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.270603] [<ffffffff810f86aa>] ? __link_path_walk+0x323/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.270611] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.270619] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.270627] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.270635] [<ffffffff8130f054>] ? _spin_lock_irqsave+0x15/0x34
    Jun 4 09:50:03 puffin kernel: [143763.270644] [<ffffffff81068fa6>] ? hrtimer_try_to_cancel+0x3a/0x43
    Jun 4 09:50:03 puffin kernel: [143763.270653] [<ffffffff8102de30>] ? pvclock_clocksource_read+0x3a/0x8b
    Jun 4 09:50:03 puffin kernel: [143763.270662] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.270696] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.270705] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.270763] INFO: task id:11812 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.270769] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.270777] id D ffff8801fcd9b880 0 11812 11811 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.270787] ffff8801fcd9b880 0000000000000286 ffff880002b27d88 ffff8801fedc1c20
    Jun 4 09:50:03 puffin kernel: [143763.270798] 00000000000006fe ffffffff81012cdb 000000000000f9e0 ffff880002b27fd8
    Jun 4 09:50:03 puffin kernel: [143763.270809] 00000000000157c0 00000000000157c0 ffff8801fabf3170 ffff8801fabf3468
    Jun 4 09:50:03 puffin kernel: [143763.270821] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.270827] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.270836] [<ffffffff81155196>] ? cap_inode_permission+0x0/0x3
    Jun 4 09:50:03 puffin kernel: [143763.270845] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.270854] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.270861] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.270869] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.270876] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.270884] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.270892] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.270901] [<ffffffff810d217e>] ? vma_link+0x74/0x9a
    Jun 4 09:50:03 puffin kernel: [143763.270908] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.270915] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.270923] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.270931] INFO: task cron:11813 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.270937] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.270945] cron D ffff8801fb1de2e0 0 11813 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.270954] ffff8801fb1de2e0 0000000000000282 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.270965] ffff88000bb357c0 0000000000000001 000000000000f9e0 ffff880002ab1fd8
    Jun 4 09:50:03 puffin kernel: [143763.270976] 00000000000157c0 00000000000157c0 ffff8801ecdf2350 ffff8801ecdf2648
    Jun 4 09:50:03 puffin kernel: [143763.270987] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.270994] [<ffffffff811976a6>] ? vsnprintf+0x40a/0x449
    Jun 4 09:50:03 puffin kernel: [143763.271002] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271011] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271018] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271025] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271033] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271041] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271049] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271057] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.271064] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.271072] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271081] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.271089] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.271097] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.271104] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.271111] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.271119] INFO: task cron:11814 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.271125] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.271133] cron D ffff8801fc48e2e0 0 11814 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.271142] ffff8801fc48e2e0 0000000000000286 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.271154] ffff88000bc617c0 000000000000000b 000000000000f9e0 ffff88007f0d7fd8
    Jun 4 09:50:03 puffin kernel: [143763.271165] 00000000000157c0 00000000000157c0 ffff8801ecdf0710 ffff8801ecdf0a08
    Jun 4 09:50:03 puffin kernel: [143763.271175] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.271181] [<ffffffff811976a6>] ? vsnprintf+0x40a/0x449
    Jun 4 09:50:03 puffin kernel: [143763.271189] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271198] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271205] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271213] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271221] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271229] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271237] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271244] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.271252] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.271259] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271268] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.271275] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.271283] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.271291] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.271298] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.271305] INFO: task cron:11815 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.271311] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.271319] cron D ffff8801fdfc8e20 0 11815 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.271328] ffff8801fdfc8e20 0000000000000286 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.271339] ffff88000bc617c0 000000000000000b 000000000000f9e0 ffff880002fa5fd8
    Jun 4 09:50:03 puffin kernel: [143763.271350] 00000000000157c0 00000000000157c0 ffff8801ecdf7810 ffff8801ecdf7b08
    Jun 4 09:50:03 puffin kernel: [143763.271362] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.271368] [<ffffffff811976a6>] ? vsnprintf+0x40a/0x449
    Jun 4 09:50:03 puffin kernel: [143763.271376] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271385] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271392] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271400] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271408] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271416] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271423] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271431] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.271439] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.271446] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271455] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.271462] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.271470] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.271478] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.271485] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.271492] INFO: task cron:11816 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.271498] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.271506] cron D ffff8801fdfcc6a0 0 11816 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.271516] ffff8801fdfcc6a0 0000000000000286 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.271527] ffff88000bb537c0 ffffffff81012cdb 000000000000f9e0 ffff88007d50ffd8
    Jun 4 09:50:03 puffin kernel: [143763.271537] 00000000000157c0 00000000000157c0 ffff8801ecdf0e20 ffff8801ecdf1118
    Jun 4 09:50:03 puffin kernel: [143763.271549] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.271554] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271563] [<ffffffff81155196>] ? cap_inode_permission+0x0/0x3
    Jun 4 09:50:03 puffin kernel: [143763.271571] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271580] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271587] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271594] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271602] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271610] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271618] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271625] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.271633] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.271641] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271649] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.271657] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.271665] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.271672] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.271679] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.271687] INFO: task cron:11817 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.271693] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.271701] cron D ffff88007f04b880 0 11817 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.271710] ffff88007f04b880 0000000000000286 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.271721] ffff88000bc077c0 0000000000000008 000000000000f9e0 ffff88007d079fd8
    Jun 4 09:50:03 puffin kernel: [143763.271732] 00000000000157c0 00000000000157c0 ffff8801ecdf62e0 ffff8801ecdf65d8
    Jun 4 09:50:03 puffin kernel: [143763.271743] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.271749] [<ffffffff811976a6>] ? vsnprintf+0x40a/0x449
    Jun 4 09:50:03 puffin kernel: [143763.271757] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271766] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271773] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271780] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271789] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271796] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271804] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271812] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.271819] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.271827] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.271836] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.271843] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.271851] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.271858] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.271865] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.271873] INFO: task cron:11818 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.271879] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.271886] cron D ffff8801ecdf7810 0 11818 7882 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.271895] ffff8801ecdf7810 0000000000000282 0000000000000001 00000001810423b0
    Jun 4 09:50:03 puffin kernel: [143763.271906] ffff88000bc7f7c0 000000000000000c 000000000000f9e0 ffff88007d0b3fd8
    Jun 4 09:50:03 puffin kernel: [143763.271917] 00000000000157c0 00000000000157c0 ffff8801ecdf2a60 ffff8801ecdf2d58
    Jun 4 09:50:03 puffin kernel: [143763.271928] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.271934] [<ffffffff811976a6>] ? vsnprintf+0x40a/0x449
    Jun 4 09:50:03 puffin kernel: [143763.271942] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.271950] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.271957] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.271964] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271972] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.271980] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.271988] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.271995] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.272003] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.272011] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.482981] [<ffffffff8130dda8>] ? thread_return+0x79/0xe0
    Jun 4 09:50:03 puffin kernel: [143763.482993] [<ffffffff811901fb>] ? _atomic_dec_and_lock+0x33/0x50
    Jun 4 09:50:03 puffin kernel: [143763.483003] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.483012] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.483020] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.483030] INFO: task id:11820 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.483037] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.483045] id D ffff8801fabba350 0 11820 11819 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.483055] ffff8801fabba350 0000000000000286 ffff88007d593d88 ffff8801fedc1c20
    Jun 4 09:50:03 puffin kernel: [143763.483067] 00000000000006fe ffff880000009680 000000000000f9e0 ffff88007d593fd8
    Jun 4 09:50:03 puffin kernel: [143763.483078] 00000000000157c0 00000000000157c0 ffff8801fabb8710 ffff8801fabb8a08
    Jun 4 09:50:03 puffin kernel: [143763.483089] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.483097] [<ffffffff810b9b8b>] ? zone_watermark_ok+0x20/0xb1
    Jun 4 09:50:03 puffin kernel: [143763.483107] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.483115] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.483123] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.483131] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.483139] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.483146] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.483154] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.483163] [<ffffffff810d217e>] ? vma_link+0x74/0x9a
    Jun 4 09:50:03 puffin kernel: [143763.483170] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.483178] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.483185] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
    Jun 4 09:50:03 puffin kernel: [143763.483194] INFO: task awk:11827 blocked for more than 120 seconds.
    Jun 4 09:50:03 puffin kernel: [143763.483200] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    Jun 4 09:50:03 puffin kernel: [143763.483207] awk D ffff88019001a350 0 11827 11823 0x00000080
    Jun 4 09:50:03 puffin kernel: [143763.483216] ffff88019001a350 0000000000000282 0000000000000000 0000000000000000
    Jun 4 09:50:03 puffin kernel: [143763.483228] 0000000000000000 ffffffff81012cdb 000000000000f9e0 ffff88007f0d9fd8
    Jun 4 09:50:03 puffin kernel: [143763.483239] 00000000000157c0 00000000000157c0 ffff8801fabb8000 ffff8801fabb82f8
    Jun 4 09:50:03 puffin kernel: [143763.483249] Call Trace:
    Jun 4 09:50:03 puffin kernel: [143763.483256] [<ffffffff81012cdb>] ? xen_hypervisor_callback+0x1b/0x20
    Jun 4 09:50:03 puffin kernel: [143763.483266] [<ffffffff81155196>] ? cap_inode_permission+0x0/0x3
    Jun 4 09:50:03 puffin kernel: [143763.506896] [<ffffffff8130e5c3>] ? __mutex_lock_common+0x122/0x192
    Jun 4 09:50:03 puffin kernel: [143763.506910] [<ffffffff8130e6eb>] ? mutex_lock+0x1a/0x31
    Jun 4 09:50:03 puffin kernel: [143763.506919] [<ffffffff810f7eac>] ? do_lookup+0x80/0x15d
    Jun 4 09:50:03 puffin kernel: [143763.506927] [<ffffffff810f892c>] ? __link_path_walk+0x5a5/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.506935] [<ffffffff810f826a>] ? do_follow_link+0x1fa/0x317
    Jun 4 09:50:03 puffin kernel: [143763.506943] [<ffffffff810f86e0>] ? __link_path_walk+0x359/0x6f5
    Jun 4 09:50:03 puffin kernel: [143763.506952] [<ffffffff810f8caa>] ? path_walk+0x66/0xc9
    Jun 4 09:50:03 puffin kernel: [143763.506960] [<ffffffff810fa114>] ? do_path_lookup+0x20/0x77
    Jun 4 09:50:03 puffin kernel: [143763.506967] [<ffffffff810fa2a0>] ? do_filp_open+0xe5/0x94b
    Jun 4 09:50:03 puffin kernel: [143763.506975] [<ffffffff8100c4e8>] ? pte_pfn_to_mfn+0x21/0x30
    Jun 4 09:50:03 puffin kernel: [143763.506983] [<ffffffff8100cc43>] ? xen_make_pte+0x7b/0x83
    Jun 4 09:50:03 puffin kernel: [143763.506991] [<ffffffff8100e585>] ? xen_set_pte_at+0xa2/0xc2
    Jun 4 09:50:03 puffin kernel: [143763.507000] [<ffffffff8105cd8e>] ? do_sigaction+0x159/0x171
    Jun 4 09:50:03 puffin kernel: [143763.507008] [<ffffffff81103705>] ? alloc_fd+0x67/0x10c
    Jun 4 09:50:03 puffin kernel: [143763.507016] [<ffffffff810eeacf>] ? do_sys_open+0x55/0xfc
    Jun 4 09:50:03 puffin kernel: [143763.507023] [<ffffffff81011b42>] ? system_call_fastpath+0x16/0x1b
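Every call trace in this syslog excerpt shows the blocked task waiting in mutex_lock under do_lookup, that is, stuck during a path lookup. On Linux, tasks in this uninterruptible D state count towards the load average just as runnable tasks do. A minimal Python sketch for summarising these hung_task reports, assuming the "INFO: task NAME:PID blocked for more than N seconds" wording seen here; hung_tasks and summarise are hypothetical names:

```python
import re
from collections import Counter

# A few of the kernel lines from the 4 Jun 2015 syslog excerpt.
SYSLOG = """\
Jun 4 09:50:03 puffin kernel: [143763.270448] INFO: task vnstatd:2101 blocked for more than 120 seconds.
Jun 4 09:50:03 puffin kernel: [143763.270763] INFO: task id:11812 blocked for more than 120 seconds.
Jun 4 09:50:03 puffin kernel: [143763.270931] INFO: task cron:11813 blocked for more than 120 seconds.
Jun 4 09:50:03 puffin kernel: [143763.271119] INFO: task cron:11814 blocked for more than 120 seconds.
Jun 4 09:50:03 puffin kernel: [143763.483194] INFO: task awk:11827 blocked for more than 120 seconds.
"""

HUNG_RE = re.compile(r"INFO: task (\S+):(\d+) blocked for more than (\d+) seconds")


def hung_tasks(log_text: str):
    """Return (command, pid, seconds) for each hung_task report in the text."""
    return [(name, int(pid), int(secs)) for name, pid, secs in HUNG_RE.findall(log_text)]


def summarise(log_text: str) -> Counter:
    """Count hung_task reports per command name."""
    return Counter(name for name, _, _ in hung_tasks(log_text))


if __name__ == "__main__":
    print(summarise(SYSLOG).most_common())
```

Run over the full excerpt, it would report six cron tasks, two id, one vnstatd and one awk.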

It has more-or-less recovered now:

uptime
10:20:55 up 1 day, 16:26, 1 user, load average: 2.21, 24.19, 60.46
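The reading above (1, 5 and 15 minute averages of 2.21, 24.19 and 60.46) shows the load had already dropped while the longer averages lagged behind. On Linux each figure is an exponentially damped average of the number of runnable plus uninterruptible (`D` state) tasks, recomputed roughly every 5 seconds, so blocked tasks such as the `awk` process in the trace count towards the load. The sketch below uses floating point instead of the kernel's fixed-point arithmetic and assumes a constant task count, purely to illustrate the lag:

```python
import math

TICK_SECONDS = 5.0  # approximate interval between kernel load-average updates


def damped_load(start: float, tasks: float, minutes: float, period_minutes: float) -> float:
    """Load average after `minutes` with a constant number of runnable + D-state tasks.

    Repeats avg = avg * e + tasks * (1 - e) once per tick, with
    e = exp(-TICK_SECONDS / (60 * period_minutes)).
    """
    e = math.exp(-TICK_SECONDS / (60.0 * period_minutes))
    avg = start
    for _ in range(round(minutes * 60 / TICK_SECONDS)):
        avg = avg * e + tasks * (1.0 - e)
    return avg
```

Starting from 100 and dropping to 2 tasks, after 15 minutes `damped_load(100, 2, 15, 1)` is essentially 2, while `damped_load(100, 2, 15, 15)` is still about 38 (that is, 2 + 98/e): the same shape as the uptime output above.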

I have reset the root passwords on all three servers so that I can log in via the Xen console when needed.

comment:18 Changed 18 months ago by chris

The load alert emails didn't go out while the load was this high; they have come through now. This was the peak:

Date: Thu, 4 Jun 2015 10:08:33 +0100 (BST)
From: root@puffin.webarch.net
To: chris@webarchitects.co.uk
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 150.63
Time: Thu Jun 4 10:08:33 2015 +0100
1 Min Load Avg: 171.68
5 Min Load Avg: 150.63
15 Min Load Avg: 100.19
Running/Total Processes: 114/959
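Since the alert emails can arrive late (as here), pulling the figures out of the message bodies afterwards is one way to rebuild a timeline of a spike. A minimal sketch, assuming the body keeps the field labels shown above, whether they sit on one line or several:

```python
import re

# Field labels as they appear in the lfd alert body above.
LFD_RE = re.compile(
    r"1 Min Load Avg:\s*(?P<m1>[\d.]+)\s+"
    r"5 Min Load Avg:\s*(?P<m5>[\d.]+)\s+"
    r"15 Min Load Avg:\s*(?P<m15>[\d.]+)\s+"
    r"Running/Total Processes:\s*(?P<running>\d+)/(?P<total>\d+)"
)


def parse_lfd_alert(body: str) -> dict:
    """Extract the load averages and process counts from an lfd alert body."""
    match = LFD_RE.search(body)
    if match is None:
        raise ValueError("no lfd load figures found")
    return {
        "load_1": float(match["m1"]),
        "load_5": float(match["m5"]),
        "load_15": float(match["m15"]),
        "running": int(match["running"]),
        "total": int(match["total"]),
    }
```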

comment:19 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.05

- Total Hours changed from 1.53 to 1.58

Looks like another load spike now: uptime is not returning anything and I can't log in via the Xen console, so I'm going to restart the server.

comment:20 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.03

- Total Hours changed from 1.58 to 1.61

It's on its way back up now; the firewall is loading and this always takes ages.

comment:23 follow-up: ↓ 24 Changed 18 months ago by ade

Hi Chris,

Do you have an understanding as to why this is happening? It seems that, as well as the known issues such as the BOA replication issues, the site going down is now becoming a regular issue, and we need to ascertain why this is the case.

Your thoughts?

Best regards,

Ade

Ade Stuart
Web Manager - Transition Network
07595 331877
The Transition Network is a registered charity. Address: 43 Fore St, Totnes, Devon, TQ9 5HN, UK. Website: www.transitionnetwork.org. TN company no: 6135675. TN charity no: 1128675.

comment:24 in reply to: ↑ 23 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.61 to 1.86

Replying to ade:

> Do you have an understanding as to why this is happening?

Not really. We have had a long history of load spikes with BOA but have never really managed to get to the bottom of it, probably because there is more than one cause. I could point out lots of past tickets on this if that would help?

Of course, load spikes are not specific to BOA, but BOA makes it really hard to work out what is going on and even harder to intervene to try to fix or mitigate things.

> It seems that as well as the known issues, such as the BOA replication issues, the site going down is now becoming a regular issue, and we need to ascertain why this is the case.

> Your thoughts?

Yes, it would be good to know the cause(s) of these, but to be honest I don't know if we ever will; we have spent a lot of time on the load spike suicides in the past. See PuffinServer#LoadSpikes and:

Personally, I'd be more interested in spending time sorting out a non-BOA server than spending even more time trying to understand what BOA is doing. However, this specific load spike and kernel issue might not be BOA related; there are lots of links related to "task blocked for more than 120 seconds xen":

Would you like me to spend some time looking into this?

Regarding the time the BOA firewall takes to load, mentioned in ticket:846#comment:20, I just timed how long it takes simply to list the firewall rules: 30 minutes (!!!). The rules are over 5k lines long:

date; iptables -L > /tmp/ip.txt ; date
Thu Jun 4 10:44:57 BST 2015
Thu Jun 4 11:15:29 BST 2015
cat /tmp/ip.txt | wc -l
5239
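The two `date` stamps put the listing at just over half an hour. A small sketch of that arithmetic; it drops the `BST` token before parsing, assuming both stamps are in the same zone. If the slowness comes from reverse DNS lookups for the addresses in each rule (an untested assumption for PuffinServer), `iptables -L -n`, which prints numeric addresses without resolving them, would be a quick comparison.

```python
from datetime import datetime

DATE_FORMAT = "%a %b %d %H:%M:%S %Y"


def parse_date_output(line: str) -> datetime:
    """Parse `date` output such as 'Thu Jun 4 10:44:57 BST 2015', ignoring the zone name."""
    fields = line.split()
    # Drop the fifth field (the zone abbreviation); strptime's %Z only accepts a few names.
    return datetime.strptime(" ".join(fields[:4] + fields[5:]), DATE_FORMAT)


def elapsed_seconds(start: str, end: str) -> int:
    """Whole seconds between two `date` outputs taken in the same time zone."""
    return int((parse_date_output(end) - parse_date_output(start)).total_seconds())
```

For the run above, `elapsed_seconds("Thu Jun 4 10:44:57 BST 2015", "Thu Jun 4 11:15:29 BST 2015")` is 1832 seconds, i.e. fewer than three lines of output per second for the 5239-line listing.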

comment:25 Changed 18 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.86 to 2.11

Just had another load spike on PuffinServer. This is a list of the load alert emails from lfd on Friday, Saturday, Sunday and today:

Jun 05 High 5 minute load average alert - 14.69
Jun 05 High 5 minute load average alert - 28.96
Jun 05 High 5 minute load average alert - 19.62
Jun 05 High 5 minute load average alert - 13.18
Jun 06 High 5 minute load average alert - 14.89
Jun 06 High 5 minute load average alert - 30.80
Jun 06 High 5 minute load average alert - 53.29
Jun 06 High 5 minute load average alert - 65.00
Jun 06 High 5 minute load average alert - 61.44
Jun 06 High 5 minute load average alert - 29.48
Jun 06 High 5 minute load average alert - 19.79
Jun 06 High 5 minute load average alert - 15.18
Jun 07 High 5 minute load average alert - 13.54
Jun 07 High 5 minute load average alert - 69.17
Jun 07 High 5 minute load average alert - 96.37
Jun 07 High 5 minute load average alert - 90.83
Jun 07 High 5 minute load average alert - 88.37
Jun 07 High 5 minute load average alert - 85.94
Jun 07 High 5 minute load average alert - 85.82
Jun 07 High 5 minute load average alert - 87.89
Jun 07 High 5 minute load average alert - 91.81
Jun 07 High 5 minute load average alert - 97.62
Jun 07 High 5 minute load average alert - 105.56
Jun 07 High 5 minute load average alert - 107.19
Jun 07 High 5 minute load average alert - 114.79
Jun 07 High 5 minute load average alert - 42.17
Jun 07 High 5 minute load average alert - 28.30
Jun 07 High 5 minute load average alert - 19.12
Jun 07 High 5 minute load average alert - 12.91
Jun 07 High 5 minute load average alert - 17.87
Jun 07 High 5 minute load average alert - 18.92
Jun 07 High 5 minute load average alert - 14.26
Jun 07 High 5 minute load average alert - 13.46
Jun 07 High 5 minute load average alert - 20.99
Jun 07 High 5 minute load average alert - 15.80
Jun 08 High 5 minute load average alert - 15.42
Jun 08 High 5 minute load average alert - 19.44
Jun 08 High 5 minute load average alert - 96.48
Jun 08 High 5 minute load average alert - 111.23
Jun 08 High 5 minute load average alert - 73.42
Jun 08 High 5 minute load average alert - 49.12
Jun 08 High 5 minute load average alert - 32.89
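With this many alerts it can be easier to reduce each day to its highest reported 5 minute load. A minimal sketch, assuming one alert subject per line in either of the subject formats used in this ticket:

```python
import re

# Matches e.g. "Jun 07 High 5 minute load average alert - 114.79" and
# "Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 120.41".
ALERT_RE = re.compile(
    r"^(?P<day>[A-Z][a-z]{2} \d{2})\b.*load average alert - (?P<load>\d+(?:\.\d+)?)\s*$"
)


def daily_peaks(lines):
    """Return {day: highest 5 minute load average reported that day}, in first-seen order."""
    peaks = {}
    for line in lines:
        match = ALERT_RE.match(line.strip())
        if match:
            day, load = match["day"], float(match["load"])
            peaks[day] = max(peaks.get(day, load), load)
    return peaks
```

Run over the list above, this gives peaks of 28.96 (Jun 05), 65.00 (Jun 06), 114.79 (Jun 07) and 111.23 (Jun 08).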

The peak of the latest one:

Date: Mon, 8 Jun 2015 10:37:52 +0100 (BST)
From: root@puffin.webarch.net
To: chris@webarchitects.co.uk
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 111.23
Time: Mon Jun 8 10:37:52 2015 +0100
1 Min Load Avg: 102.54
5 Min Load Avg: 111.23
15 Min Load Avg: 62.46
Running/Total Processes: 3/472

It has recovered now; I couldn't find anything in the logs.

comment:26 Changed 17 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.11 to 2.36

There was a load spike at around 4am this morning; this was the first lfd alert:

Date: Tue, 7 Jul 2015 03:55:46 +0100 (BST)
From: root@puffin.webarch.net
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 13.07
Time: Tue Jul 7 03:54:54 2015 +0100
1 Min Load Avg: 38.25
5 Min Load Avg: 13.07
15 Min Load Avg: 5.17
Running/Total Processes: 52/458

The peak:

Date: Tue, 7 Jul 2015 04:09:28 +0100 (BST)
From: root@puffin.webarch.net
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 94.54
Time: Tue Jul 7 04:09:22 2015 +0100
1 Min Load Avg: 94.67
5 Min Load Avg: 94.54
15 Min Load Avg: 66.86
Running/Total Processes: 64/685

And the last alert:

Date: Tue, 7 Jul 2015 04:21:59 +0100 (BST)
From: root@puffin.webarch.net
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 12.87
Time: Tue Jul 7 04:21:59 2015 +0100
1 Min Load Avg: 1.04
5 Min Load Avg: 12.87
15 Min Load Avg: 36.69
Running/Total Processes: 1/376

There were a lot of 503 errors served during this time, almost all to bots, for example:

"66.249.92.63" www.transitionnetwork.org [07/Jul/2015:04:13:41 +0100] "GET /news/network/feed HTTP/1.1" 503 206 341 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 2 subscribers; feed-id=12394840076319634974)" 0.000 "-"
"207.46.13.110, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:13:45 +0100] "GET /initiatives/glastonbury HTTP/1.0" 503 206 406 371 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.001 "-"
"66.249.92.60" www.transitionnetwork.org [07/Jul/2015:04:13:52 +0100] "GET /about HTTP/1.1" 503 206 243 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 2 subscribers; feed-id=2875799361570896823)" 0.000 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:13:55 +0100] "GET /blogs/rob-hopkins/2013-01-14/british-bean-back-interview-josiah-meldrum-hodmedod-and-transition HTTP/1.1" 503 206 343 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"65.19.138.34" www.transitionnetwork.org [07/Jul/2015:04:13:58 +0100] "GET /blogs/feed/rob-hopkins/atom/ HTTP/1.1" 503 206 399 371 "-" "Feedly/1.0 (+http://www.feedly.com/fetcher.html; like FeedFetcher-Google)" 0.142 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:14:05 +0100] "GET /tags/colin-godmans-farm HTTP/1.1" 503 206 271 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"66.249.92.63" www.transitionnetwork.org [07/Jul/2015:04:14:07 +0100] "GET /blogs/feed HTTP/1.1" 503 206 334 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 10 subscribers; feed-id=2865359885998846808)" 0.000 "-"
"65.19.138.33" www.transitionnetwork.org [07/Jul/2015:04:14:12 +0100] "GET /blogs/feed HTTP/1.1" 503 206 339 371 "-" "Feedly/1.0 (+http://www.feedly.com/fetcher.html; like FeedFetcher-Google)" 0.177 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:14:15 +0100] "GET /people/nyle-seabright HTTP/1.1" 503 206 269 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"66.249.78.181" www.transitionnetwork.org [07/Jul/2015:04:14:18 +0100] "GET /blogs/feed/rob-hopkins/ HTTP/1.1" 503 206 347 376 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.000 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:14:25 +0100] "GET /people/adam-walker1 HTTP/1.1" 503 206 267 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"94.228.34.249" www.transitionnetwork.org [07/Jul/2015:04:14:32 +0100] "GET /forums/themes/local-government HTTP/1.1" 503 206 321 376 "-" "magpie-crawler/1.1 (U; Linux amd64; en-GB; +http://www.brandwatch.net)" 0.000 "-"
"207.46.13.110, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:14:35 +0100] "GET /initiatives/building-resilience-mission-brim HTTP/1.0" 503 206 427 371 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"66.249.92.63" www.transitionnetwork.org [07/Jul/2015:04:14:39 +0100] "GET /rss.xml HTTP/1.1" 503 206 245 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 1 subscribers; feed-id=2525513441670857354)" 0.000 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:14:45 +0100] "GET /tags/leicesershire HTTP/1.1" 503 206 266 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"185.2.129.62" www.transitionnetwork.org [07/Jul/2015:04:14:49 +0100] "GET /blogs/feed/rob-hopkins/ HTTP/1.1" 503 206 243 376 "http://transitionculture.org/?feed=rss2" "SimplePie/1.0 Beta (Feed Parser; http://www.simplepie.org/; Allow like Gecko) Build/20060129" 0.000 "-"
"185.2.129.62" www.transitionnetwork.org [07/Jul/2015:04:14:49 +0100] "GET /blogs/feed/rob-hopkins/ HTTP/1.1" 503 206 243 376 "http://transitionculture.org/?feed=rss2" "SimplePie/1.0 Beta (Feed Parser; http://www.simplepie.org/; Allow like Gecko) Build/20060129" 0.000 "-"
"66.249.92.57" www.transitionnetwork.org [07/Jul/2015:04:14:49 +0100] "GET /news/feed HTTP/1.1" 503 206 332 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 4 subscribers; feed-id=7675538851756851077)" 0.000 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:14:55 +0100] "GET /blogs/feed/rob-hopkins/ HTTP/1.1" 503 206 271 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"66.249.92.60" www.transitionnetwork.org [07/Jul/2015:04:15:00 +0100] "GET /blogs/feed/rob-hopkins/2013 HTTP/1.1" 503 206 265 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 2 subscribers; feed-id=3110067181455394580)" 0.000 "-"
"66.249.78.181" www.transitionnetwork.org [07/Jul/2015:04:15:02 +0100] "GET /sites/default/files/resize/remote/b7409b46d23f7dd65940714c8201cc07-92x100.jpg HTTP/1.1" 503 206 313 376 "-" "Googlebot-Image/1.0" 0.000 "-"
"207.46.13.130" www.transitionnetwork.org [07/Jul/2015:04:15:05 +0100] "GET /user/login?destination=news/2013-12-16/bank-england-takes-note-local-money HTTP/1.1" 503 206 354 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"66.249.92.63" www.transitionnetwork.org [07/Jul/2015:04:15:10 +0100] "GET /news/network/feed HTTP/1.1" 503 206 341 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 2 subscribers; feed-id=12394840076319634974)" 0.000 "-"
"66.249.78.217, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:11 +0100] "GET /robots.txt HTTP/1.0" 503 206 401 371 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.011 "-"
"127.0.0.1" localhost [07/Jul/2015:04:15:14 +0100] "GET /robots.txt HTTP/1.1" 404 162 179 333 "-" "Wget/1.13.4 (linux-gnu)" 0.000 "-"
"207.46.13.130" www.transitionnetwork.org [07/Jul/2015:04:15:15 +0100] "GET /tags/publications HTTP/1.1" 503 206 297 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"94.228.34.249" www.transitionnetwork.org [07/Jul/2015:04:15:15 +0100] "GET /forum/rss/forum/transitiongroup-food HTTP/1.1" 503 206 327 376 "-" "magpie-crawler/1.1 (U; Linux amd64; en-GB; +http://www.brandwatch.net)" 0.000 "-"
"66.249.92.62, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:20 +0100] "GET /news/feed HTTP/1.0" 503 206 379 371 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 1 subscribers; feed-id=730710629253691737)" 0.001 "-"
"207.46.13.130" www.transitionnetwork.org [07/Jul/2015:04:15:25 +0100] "GET /tags/militarisation HTTP/1.1" 503 206 299 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"94.228.34.250" www.transitionnetwork.org [07/Jul/2015:04:15:25 +0100] "GET /forum/rss/tags/covered-market HTTP/1.1" 503 206 320 376 "-" "magpie-crawler/1.1 (U; Linux amd64; en-GB; +http://www.brandwatch.net)" 0.000 "-"
"66.249.92.57" www.transitionnetwork.org [07/Jul/2015:04:15:30 +0100] "GET /blogs/feed HTTP/1.1" 503 206 334 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 10 subscribers; feed-id=2865359885998846808)" 0.000 "-"
"178.63.75.73" www.transitionnetwork.org [07/Jul/2015:04:15:31 +0100] "GET /taxonomy/term/998/all HTTP/1.0" 503 206 247 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.037 "-"
"178.63.75.73" www.transitionnetwork.org [07/Jul/2015:04:15:33 +0100] "GET /people/lloyd-williams HTTP/1.0" 503 206 247 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.023 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:15:35 +0100] "GET /blogs/rob-hopkins/2014-04/katrina-brown-resilience-impact-and-learning HTTP/1.1" 503 206 318 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"188.40.120.19" www.transitionnetwork.org [07/Jul/2015:04:15:35 +0100] "GET /forums/process/web-and-comms/free-crowdmapping-solution-web-mobile-apps HTTP/1.0" 503 206 297 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.023 "-"
"144.76.100.237" www.transitionnetwork.org [07/Jul/2015:04:15:38 +0100] "GET /forums/process/web-and-comms/are-dedicated-servers-best-business HTTP/1.0" 503 206 290 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.023 "-"
"136.243.16.102, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:42 +0100] "GET /forums/process/web-and-comms/eco-friendly-printing-and-print-manufacturers HTTP/1.0" 503 206 461 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.000 "-"
"136.243.16.102, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:43 +0100] "GET /people/pravin-ganore HTTP/1.0" 503 206 407 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.001 "-"
"136.243.16.102, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:44 +0100] "GET /taxonomy/term/999/0/feed HTTP/1.0" 503 206 411 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.001 "-"
"136.243.16.102, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:44 +0100] "GET /people/neha-patel HTTP/1.0" 503 206 404 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.000 "-"
"66.249.78.195" www.transitionnetwork.org [07/Jul/2015:04:15:44 +0100] "GET /sites/default/files/resize/remote/ceb9808fa3768e278199f7c502612729-550x366.jpg HTTP/1.1" 503 206 314 376 "-" "Googlebot-Image/1.0" 0.000 "-"
"207.46.13.110" www.transitionnetwork.org [07/Jul/2015:04:15:45 +0100] "GET /people/janev-cameron HTTP/1.1" 503 206 268 376 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" 0.000 "-"
"136.243.16.102, 127.0.0.1" www.transitionnetwork.org [07/Jul/2015:04:15:46 +0100] "GET /forums/process/web-and-comms/free-tool-creating-interactive-iphoneandroid-ebooks HTTP/1.0" 503 206 467 371 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6 - James BOT - WebCrawler http://cognitiveseo.com/bot.html" 0.000 "-"
"68.180.228.44" www.transitionnetwork.org [07/Jul/2015:04:15:47 +0100] "GET /tags/sustainable-bays HTTP/1.1" 503 206 288 376 "-" "Mozilla/5.0 (compatible; Yahoo! Slurp; http://help.yahoo.com/help/us/ysearch/slurp)" 0.000 "-"
"151.80.31.122" booker-stage-20140501.transitionnetwork.org [07/Jul/2015:04:15:47 +0100] "GET /events/partner HTTP/1.1" 404 134 208 336 "-" "Mozilla/5.0 (compatible; AhrefsBot/5.0; +http://ahrefs.com/robot/)" 0.000 "1.32"
"66.249.92.60" www.transitionnetwork.org [07/Jul/2015:04:15:49 +0100] "GET /news/feed HTTP/1.1" 503 206 332 376 "-" "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 4 subscribers; feed-id=7675538851756851077)" 0.000 "-"

I don't know whether the bots caused the load spike or were victims of it.
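One way to start on that question is to tally the logged requests by status code and by whether the user agent looks like a crawler. A sketch, assuming the field layout inferred from the lines above (the actual BOA Nginx `log_format` isn't shown in this ticket) and a hypothetical, incomplete list of crawler keywords:

```python
import re
from collections import Counter

# Assumed layout, inferred from the sample lines:
# "client_ips" host [time] "request" status n n n "referer" "user_agent" ...
LOG_RE = re.compile(
    r'^"(?P<ips>[^"]*)" (?P<host>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ \S+ \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical keyword list; extend it for other crawlers seen in the logs.
BOT_MARKERS = ("bot", "crawler", "slurp", "feedfetcher", "feedly", "simplepie", "wget")


def is_bot(agent: str) -> bool:
    """Crude check: does the user agent contain any known crawler keyword?"""
    agent = agent.lower()
    return any(marker in agent for marker in BOT_MARKERS)


def tally(lines) -> Counter:
    """Count requests by (status code, looks like a bot); unparsable lines are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_RE.match(line.strip())
        if match:
            counts[(int(match["status"]), is_bot(match["agent"]))] += 1
    return counts
```

Passing it the access-log lines for a window before and during a spike (for example an open file object) gives a `Counter` keyed by `(status, is_bot)`. A large share of bot traffic already present before the 503s start, rather than only during them, would lean towards the crawlers being a cause rather than a victim.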

Note there was a 404 request above for http://booker-stage-20140501.transitionnetwork.org/robots.txt. The staging sites should have robots.txt files to ensure they are not crawled or indexed; however, this site isn't a copy of the main site (http://booker-stage-20140501.transitionnetwork.org/), so I don't think there is an issue here.

comment:27 follow-up: ↓ 28 Changed 17 months ago by paul

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.36 to 2.61

That stage website has been removed a while back.

Do we have a Google Webmaster account for the transitionnetwork.org website? It may be worth investigating how to manually remove this page from the index.

The most recent stage site does have an appropriate robots.txt, but I'm not sure how this was achieved. Next time we build a platform I'll follow the notes in the wiki and check that the robots.txt file is correct.

https://booker-stage-20150319.transitionnetwork.org/robots.txt

User-agent: *
Disallow: /
Sitemap: http://www.transitionnetwork.org/sitemap.xml
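Python's standard `urllib.robotparser` module can confirm that a robots.txt body like the one above keeps crawlers off every path, which could be handy when checking the next stage site. A sketch that parses the text directly rather than fetching it; the staging URL in the usage note is only an example:

```python
from urllib.robotparser import RobotFileParser

# The stage site's robots.txt as quoted above.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
Sitemap: http://www.transitionnetwork.org/sitemap.xml
"""


def is_blocked(robots_txt: str, url: str, agent: str = "*") -> bool:
    """True if the robots.txt text forbids `agent` from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)
```

`is_blocked(STAGING_ROBOTS, "https://booker-stage-20150319.transitionnetwork.org/about")` returns `True`, whereas an empty robots.txt blocks nothing.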

comment:28 in reply to: ↑ 27 Changed 17 months ago by chris

Replying to paul:

> That stage website has been removed a while back.

Right, I realised that halfway through writing it up in the comment above.

> Do we have a Google Webmaster account for the transitionnetwork.org website? It may be worth investigating how to manually remove this page from the index.

Somebody should have one? Ask Sam perhaps?

> The most recent stage site does have an appropriate robots.txt but not sure how this was achieved.

I think robots.txt files are created for staging sites but not for the default BOA splash pages served for domains matching the wildcard DNS, for example:

- https://foo.transitionnetwork.org/robots.txt

- https://bar.transitionnetwork.org/robots.txt

- https://baz.transitionnetwork.org/robots.txt

So I'd suggest this is an issue for BOA (the lack of robots.txt files). However, as we now appear to have a policy of not applying BOA updates (see ticket:754#comment:60), there seems to be no point in raising an issue with them, since even if they fix it the fix won't be applied to PuffinServer?

There was another load spike just now, the peak:

Date: Tue, 7 Jul 2015 12:06:16 +0100 (BST)
From: root@puffin.webarch.net
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 59.74
Time: Tue Jul 7 12:06:16 2015 +0100
1 Min Load Avg: 106.45
5 Min Load Avg: 59.74
15 Min Load Avg: 26.01
Running/Total Processes: 112/600

comment:29 Changed 17 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 2.61 to 2.71

Oops, forgot to add time to the comment above.

comment:30 Changed 16 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.15

- Total Hours changed from 2.71 to 2.86

We are seeing very high load spikes on PuffinServer on a daily basis at the moment. These are the subject lines from some of the alert emails:

Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 12.55
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 34.23
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 73.63
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 120.41
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 88.71
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 59.66
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 39.93
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 26.81
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 17.95
Jul 27 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 12.07
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 21.47
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 76.39
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 59.15
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 39.67
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 26.66
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 17.89
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 12.05
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 70.32
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 57.11
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 38.34
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 25.73
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 17.31
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 12.63
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 25.05
Jul 28 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 16.87
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 12.04
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 40.73
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 92.19
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 68.15
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 45.92
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 30.78
Jul 29 root@puffi lfd on puffin.webarch.net: High 5 minute load average alert - 21.26

The tops of these peaks are not picked up by Munin, see:

I'm not convinced that it is worth spending time on this issue any more; if anyone disagrees, please let me know.
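One possible reason Munin misses the tops of these peaks, offered as an assumption rather than a diagnosis: Munin polls every 5 minutes by default and its longer-range graphs plot averages over wider intervals (for example 30 minutes), which flattens short spikes; munin-node may also simply fail to answer while the server is swamped. The arithmetic of the averaging effect, treating the Jul 27 alert values as consecutive 5-minute samples:

```python
def bin_means(samples, bin_size):
    """Average consecutive samples in fixed-size bins; the last bin may be shorter."""
    if bin_size < 1:
        raise ValueError("bin_size must be at least 1")
    bins = [samples[i:i + bin_size] for i in range(0, len(samples), bin_size)]
    return [sum(b) / len(b) for b in bins]


# 5 minute load averages from the Jul 27 alert subjects, assumed to be 5 minutes apart.
JUL_27 = [12.55, 34.23, 73.63, 120.41, 88.71, 59.66, 39.93, 26.81, 17.95, 12.07]
```

`max(JUL_27)` is 120.41, but `max(bin_means(JUL_27, 6))`, six 5-minute samples per 30-minute bin, is about 64.9: roughly half the height of the real peak.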

comment:31 Changed 15 months ago by chris

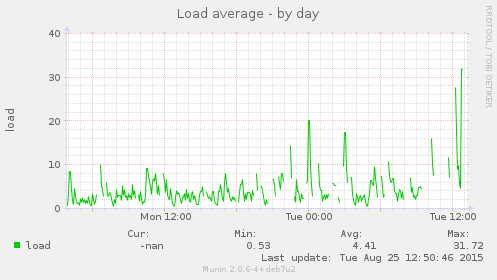

Big load spike today, I'll post some stats about it on this ticket.

comment:32 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.86 to 3.11

These are the subject lines from today's lfd load alert emails; these are sent every 5 minutes when the load is above 10 or so:

Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 15.84 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 26.13 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 17.56 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.28 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 32.92 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 30.15 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 20.27 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 17.02 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.57 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.04 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 31.83 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 38.05 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 26.59 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 19.76 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.28 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 16.31 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 21.37 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.40 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.99 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 22.18 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 15.10 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.05 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.05 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 33.59 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 46.81 Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 33.24 Aug 25 lfd on puffin.webarch.net: High 5 minute 
load average alert - 22.31
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 15.13
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.46
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.23
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 32.07
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 57.67
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 44.66
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 30.17
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 20.29
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.73
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.51
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 23.35
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 20.75
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.97
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.99
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 29.44
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 32.96
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 15.04
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.95
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 13.63
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.32
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 31.23
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 70.98
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 78.94
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 77.84
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 86.56
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 106.85
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 112.84
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 105.13
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 93.73
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 97.14
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 100.15
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 104.10
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 101.91
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 97.32
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 30.86
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 19.46
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 16.40
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 30.10
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 38.24
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 35.38
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 23.76
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 19.16
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.97
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.08
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 31.02
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 53.04
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 56.32
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 55.31
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 78.01
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 76.02
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 61.12
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 40.95
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 16.53
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 16.12
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 12.48
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 32.67
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 31.74
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 21.30
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 14.62
Aug 25 lfd on puffin.webarch.net: High 5 minute load average alert - 31.64
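To put a number on how much worse than usual a run of alerts like this is, the subjects can be summarised rather than eyeballed. This is a minimal sketch, assuming the alert subjects have been saved one per line in a file; `summarise_lfd_alerts` is a hypothetical helper and its default threshold of 50 is an arbitrary choice, not an lfd setting.

```shell
# Hypothetical helper: summarise lfd alert subjects of the form
#   "... High 5 minute load average alert - 112.84"
# read one per line on stdin. Prints the number of alerts, the highest
# 5 minute load average seen and how many alerts exceeded THRESHOLD.
# Usage: summarise_lfd_alerts [THRESHOLD] < alert-subjects.txt
summarise_lfd_alerts() {
    threshold="${1:-50}"  # arbitrary default, not an lfd setting
    awk -v t="$threshold" '
        # Find "alert - <number>" and keep just the number
        match($0, /alert - [0-9]+(\.[0-9]+)?/) {
            v = substr($0, RSTART + 8, RLENGTH - 8) + 0
            n++
            if (n == 1 || v > max) max = v
            if (v > t + 0) over++
        }
        END { printf "alerts=%d max=%.2f over_%s=%d\n", n, max, t, over }
    '
}
```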

As usual, the tops of these peaks haven't shown up in Munin:

This was a worse-than-usual load spike, but we get lots of these every day; it has always been the case with BOA (see wiki:PuffinServer#LoadSpikes). Given the current server architecture, I don't know what can be done about it other than putting up with them.

comment:33 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.5

- Total Hours changed from 3.11 to 3.61

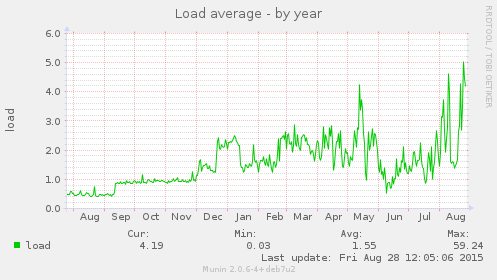

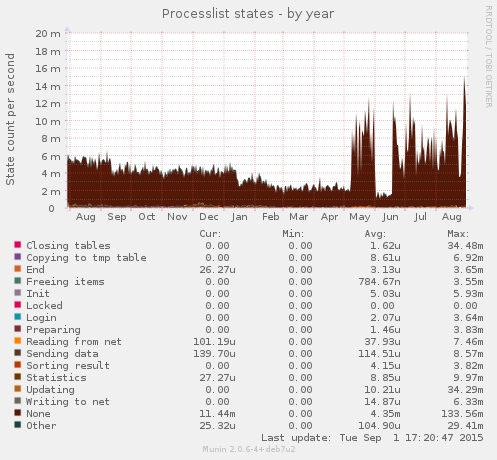

I have just spent some time looking through the Puffin Munin stats and this graph indicates the load increase on the server over the last year:

The only other graph that has changed this much over the year is the PHP-FPM memory usage, and this basically tracks the number of processes running (the process size hasn't changed much):

Note that a couple of years ago, when we were trying to manually set the number of PHP-FPM processes to address the BOA load spikes, we did have both high and low values for this, for example:

- /trac/attachment/ticket/483/multips_memory-month.png

- /trac/attachment/ticket/555/puffin_2013-07-10_multips_memory-day.png

These examples were taken from a quick look through some of the wiki:PuffinServerBoaLoadSpikes#BOALoadSpikes tickets.
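Since the PHP-FPM memory graph is roughly the number of processes multiplied by the process size, the two can be separated on the server itself. A minimal sketch, assuming the pool processes are named `php-fpm` on Puffin, so that `ps -C php-fpm -o rss=` lists their resident set sizes in KiB; `summarise_rss` is a hypothetical helper. RSS counts shared pages in every process, so the total overstates real usage.

```shell
# Hypothetical helper: read RSS values in KiB, one per line (for example from
# `ps -C php-fpm -o rss=`, assuming that is the process name on this server),
# and print the process count plus total and mean RSS in MiB.
# RSS includes shared pages, so the total is an upper bound, not exact usage.
summarise_rss() {
    awk '
        $1 ~ /^[0-9]+$/ { n++; total += $1 }
        END {
            if (n == 0) { print "processes=0"; exit }
            printf "processes=%d total_mib=%.1f mean_mib=%.1f\n", n, total / 1024, total / n / 1024
        }
    '
}
# Usage on the server: ps -C php-fpm -o rss= | summarise_rss
```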

This autumn will mark the third anniversary of Transition Network using BOA as its Drupal hosting platform. Personally, I'd be happy to move back to a system that we have more control over, so that we have a chance to find the cause of issues such as these load spikes and address them effectively.

We just had a big load spike while I was writing this comment, and watching it in top there were lots of perl, sh and php processes running as the aegir user (the site runs as tn.web). If we want to be able to do something about the downtime caused by all these load spikes, we need a server we can control, and with BOA one is not in control (this is the whole point of it...).
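Rather than watching top during a spike, a snapshot of who is running what can be counted, which would show whether the perl, sh and php processes really are mostly owned by aegir rather than tn.web. A minimal sketch; `count_procs_by_user` is a hypothetical helper that only reads `ps -eo user=,comm=` style output.

```shell
# Hypothetical helper: count processes per (user, command) pair from
# `ps -eo user=,comm=` style input on stdin, most common first.
count_procs_by_user() {
    awk 'NF >= 2 { count[$1 " " $2]++ }
         END { for (k in count) printf "%d %s\n", count[k], k }' \
        | sort -k1,1nr -k2
}
# Usage during a spike: ps -eo user=,comm= | count_procs_by_user | head -n 15
```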

comment:34 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 3.61 to 3.86

I have been looking at the Munin stats for Puffin again, trying to work out why the server has been having so many load spikes, and these graphs may be indicating something. These stats are from MySQL:

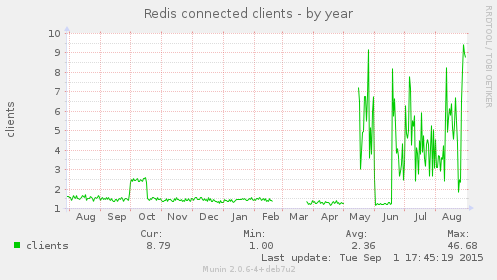

These are from Redis, which caches MySQL data:

Firewall connections:

And the direction of the traffic:

This last graph seems to indicate that the cause is additional incoming connections.

comment:35 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.5

- Total Hours changed from 3.86 to 4.36

I have spent some time looking at the wiki:WebServerLogs#logstalgia graphical representation of the Nginx access.log (which is like watching Pong!), and these things were very noticeable:

- Huge number of requests for /?q=node/add, 8,867 today, 17,113 yesterday, 18,082 the day before.

- Huge number of requests for /user/register, 10,496 today, 19,898 yesterday, 20,907 the day before.

- A lot of requests for /people/kion-kashefi, 3,277 today, 6,291 yesterday, 6,451 the day before.
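Per-day counts like these can also be pulled straight from the rotated logs. A minimal sketch, assuming the log format of the Nginx lines quoted in this ticket (the request line is the second quoted field) and that `zcat -f` is available to read both plain and gzipped rotations; `count_path_hits` is a hypothetical helper. It counts exact path matches only, so variants such as `/user/register?destination=...` are not included and the numbers may differ from what logstalgia shows.

```shell
# Hypothetical helper: for each log file given, print how many requests had a
# path exactly equal to PATH. Assumes the request line is the second quoted
# field of each log line, as in the Nginx log lines quoted in this ticket.
# zcat -f passes plain files through unchanged and decompresses .gz files.
# Usage: count_path_hits PATH FILE...
count_path_hits() {
    path="$1"; shift
    for f in "$@"; do
        hits=$(zcat -f -- "$f" | awk -F'"' -v p="$path" '
            { split($4, req, " "); if (req[2] == p) n++ }  # req[2] is the path
            END { print n + 0 }')
        printf '%s %s\n' "$f" "$hits"
    done
}
# Example: count_path_hits /user/register /var/log/nginx/access.log \
#          /var/log/nginx/access.log.1 /var/log/nginx/access.log.2.gz
```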

All I can conclude from this is that there are a huge number of spam bots out there... and this could be the cause of the change in behaviour of the site and why nothing shows up in the Piwik stats (as Piwik stats are from people not bots).

comment:36 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.43

- Total Hours changed from 4.36 to 4.79

Looking at yesterday's Nginx access.log.1 via wiki:WebServerLogs#goaccess, there are a huge number of requests via Google proxies:

| Volume | Requests | IP |
|---|---|---|
| 697.66 KB | 8075 | 66.249.93.187 |
| 694.56 KB | 7944 | 66.249.84.252 |
| 703.52 KB | 7916 | 66.249.93.179 |
| 690.28 KB | 7869 | 66.249.84.198 |
| 668.66 KB | 7810 | 66.249.84.194 |
| 734.59 KB | 7797 | 66.249.81.219 |
| 826.61 KB | 7773 | 66.249.81.225 |
| 673.94 KB | 7750 | 66.249.93.183 |
| 764.81 KB | 7700 | 66.249.81.222 |
| 374.77 KB | 4262 | 66.102.6.213 |
| 361.39 KB | 4146 | 66.102.6.205 |
| 350.12 KB | 4091 | 66.102.6.209 |

You can look up the reverse DNS for each of these addresses via:

- https://www.robtex.net/#!dns=66.249.93.187

- https://www.robtex.net/#!dns=66.249.84.252

- https://www.robtex.net/#!dns=66.249.93.179

- https://www.robtex.net/#!dns=66.249.84.198

- https://www.robtex.net/#!dns=66.249.84.194

- https://www.robtex.net/#!dns=66.249.81.219

- https://www.robtex.net/#!dns=66.249.81.225

- https://www.robtex.net/#!dns=66.249.93.183

- https://www.robtex.net/#!dns=66.249.81.222

- https://www.robtex.net/#!dns=66.102.6.213

- https://www.robtex.net/#!dns=66.102.6.205

- https://www.robtex.net/#!dns=66.102.6.209
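The per-address counts from goaccess above can be cross-checked with a few lines of awk. A minimal sketch, assuming the same log format; where the first field holds a forwarded chain such as `"66.249.75.3, 127.0.0.1"` (as in some of the Googlebot lines quoted in this ticket), only the first address is counted. `top_client_ips` is a hypothetical helper.

```shell
# Hypothetical helper: print the N busiest client addresses (default 10) from
# an access log on stdin, busiest first. The address is the first quoted field;
# for a forwarded chain like "66.249.75.3, 127.0.0.1" only the first is kept.
top_client_ips() {
    n="${1:-10}"
    awk -F'"' '
        $2 != "" { split($2, addr, ", *"); count[addr[1]]++ }
        END { for (ip in count) printf "%d %s\n", count[ip], ip }
    ' | sort -k1,1nr -k2 | head -n "$n"
}
# Usage: top_client_ips 12 < /var/log/nginx/access.log.1
# A PTR (reverse DNS) lookup for one result from the shell: host 66.249.93.187
```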

This is what we have for the top requests by hits:

| Volume | Requests | Path |
|---|---|---|
| 3.45 KB | 41616 | /feeds/importer/transition_blogs_importer/15800 |
| 1.19 MB | 4524 | / |
| 288.78 MB | 3491 | /blogs/feed/rob-hopkins/ |
| 78.69 MB | 2633 | /news/feed |
| 24.63 MB | 2019 | /blogs/feed/rob-hopkins |
| 1.71 MB | 1684 | /robots.txt |
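A similar sketch ranks request paths by hits, roughly the hits column of the goaccess table above without the bandwidth figures. It again assumes the log format quoted in this ticket and uses a hypothetical `top_paths` helper; query strings are kept here, so the `hub.*` variants of the importer URL are counted separately from the bare path.

```shell
# Hypothetical helper: print the N most requested paths (default 10) from an
# access log on stdin. The path is the second word of the request line, which
# is the second quoted field; query strings are left on, so /x and /x?a=b
# are counted separately.
top_paths() {
    n="${1:-10}"
    awk -F'"' '
        { split($4, req, " "); if (req[2] != "") count[req[2]]++ }
        END { for (p in count) printf "%d %s\n", count[p], p }
    ' | sort -k1,1nr -k2 | head -n "$n"
}
# Usage: top_paths 6 < /var/log/nginx/access.log.1
```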

I have no idea why there are over 41k requests for this blank page and these are all from Google -- Google is requesting this URL multiple times per second!

"66.249.84.252" www.transitionnetwork.org [01/Sep/2015:19:13:33 +0100] "GET /feeds/importer/transition_blogs_importer/15800 HTTP/1.1" 200 0 327 0 "-" "FeedFetcher-Google; (+http://www.google.com/feedfetcher.html)" 20.633 "-"
"66.249.84.252" www.transitionnetwork.org [01/Sep/2015:19:13:33 +0100] "GET /feeds/importer/transition_blogs_importer/15800 HTTP/1.1" 200 0 327 0 "-" "FeedFetcher-Google; (+http://www.google.com/feedfetcher.html)" 20.514 "-"
"66.249.93.187" www.transitionnetwork.org [01/Sep/2015:19:13:33 +0100] "GET /feeds/importer/transition_blogs_importer/15800 HTTP/1.1" 200 0 327 0 "-" "FeedFetcher-Google; (+http://www.google.com/feedfetcher.html)" 20.493 "-"

Run this command on the server to see, it's really crazy:

sudo -i tail -f /var/log/nginx/access.log | grep transition_blogs_importer
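To put numbers on "multiple times per second" rather than watching `tail -f`, the matching requests can be bucketed by the second in the log timestamp. A minimal sketch; `requests_per_second` is a hypothetical helper, and it assumes the first `[...]` on each line is the timestamp, as in the log lines above.

```shell
# Hypothetical helper: read access log lines on stdin, keep those containing
# PATTERN (a fixed string) and print how many fell in each second, busiest
# first. Assumes the first "[...]" on each line is the timestamp, e.g.
# [01/Sep/2015:19:13:33 +0100].
requests_per_second() {
    grep -F -- "$1" | awk '
        {
            s = index($0, "["); e = index($0, "]")
            if (s == 0 || e <= s) next
            ts = substr($0, s + 1, e - s - 1)  # 01/Sep/2015:19:13:33 +0100
            sub(/ .*/, "", ts)                  # drop the timezone offset
            count[ts]++
        }
        END { for (t in count) printf "%d %s\n", count[t], t }
    ' | sort -k1,1nr -k2
}
# Usage: sudo cat /var/log/nginx/access.log | requests_per_second transition_blogs_importer | head
```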

Some of the URLs look like this:

"66.249.93.187" www.transitionnetwork.org [01/Sep/2015:19:15:06 +0100] "GET /feeds/importer/transition_blogs_importer/15800?hub.topic=http://feeds.feedburner.com/TransitionVoice&hub.challenge=15765967025011620143&hub.verify_token=ab6f372cedf4209205adf1b9abb9315f&hub.mode=subscribe&hub.lease_seconds=432000 HTTP/1.1" 301 178 510 425 "-" "FeedFetcher-Google; (+http://www.google.com/feedfetcher.html)" 0.000 "-"
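The query string on that request uses PubSubHubbub parameter names (hub.mode=subscribe, hub.topic, hub.challenge, hub.verify_token, hub.lease_seconds), which is what a hub sends to a subscriber's callback URL to verify a subscription. As I understand the PubSubHubbub spec, the subscriber is expected to confirm with a 2xx response whose body is the hub.challenge value, whereas this request got a 301. A small sketch for pulling those parameters out of logged URLs; `hub_params` is a hypothetical helper and the values are left URL-encoded.

```shell
# Hypothetical helper: print the hub.* parameters from a logged request path,
# one "name=value" per line. Values are left URL-encoded.
hub_params() {
    printf '%s\n' "$1" \
        | sed -n 's/^[^?]*?//p' \
        | tr '&' '\n' \
        | grep '^hub\.'
}
# Usage: hub_params '/feeds/importer/transition_blogs_importer/15800?hub.mode=subscribe&...'
```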

So... does anyone know anyone at Transition Voice? Perhaps they should be contacted to see if they have an idea about this behaviour? Or should we contact Google?

I'm very tempted to block all these Google IP addresses via the firewall (I don't think we can do it at the Nginx level, as BOA is running the server) to see if that improves the situation with the server... Does anyone have any thoughts on this?
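If the firewall route were taken: lfd, which sends the load alerts in this ticket, is part of ConfigServer Security & Firewall (csf), and csf has a `csf -d IP [comment]` option to deny an address. A dry-run sketch that only prints the commands for review, so nothing is blocked by running it; `print_csf_denies` is a hypothetical helper and it assumes csf is the firewall actually managing Puffin.

```shell
# Hypothetical helper: read IPv4 addresses (one per line) on stdin and print a
# csf deny command for each unique address, for a human to review.
# It only prints; it does not run csf or change the firewall.
print_csf_denies() {
    comment="${1:-ticket 846}"  # hypothetical default comment
    awk -v c="$comment" '
        # Only accept dotted-quad IPv4 addresses, each one once
        /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && !seen[$0]++ {
            printf "csf -d %s \"%s\"\n", $0, c
        }
    '
}
# Usage: printf '%s\n' 66.249.93.187 66.102.6.213 | print_csf_denies
```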

comment:37 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 4.79 to 4.89

I sent an (unencrypted) question via http://transitionvoice.com/contact-us/ and moments later all the Google proxy requests stopped... how weird is that!?

The only requests we now have from the Google subnet addresses are from Googlebot, e.g.:

"66.249.75.150" www.transitionnetwork.org [01/Sep/2015:19:39:39 +0100] "GET /tags/newsletter?page=4 HTTP/1.1" 301 178 443 394 "-" "Mozilla/5.0 (iPhone; CPU iPhone OS 8_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12F70 Safari/600.1.4 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.000 "-"
"66.249.75.3, 127.0.0.1" www.transitionnetwork.org [01/Sep/2015:19:39:59 +0100] "GET /blogs/feed/rob-hopkins HTTP/1.0" 200 66986 392 67674 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.024 "3.49"
"66.249.75.150" www.transitionnetwork.org [01/Sep/2015:19:40:34 +0100] "GET /tags/newsletter HTTP/1.1" 200 9943 436 10656 "-" "Mozilla/5.0 (iPhone; CPU iPhone OS 8_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12F70 Safari/600.1.4 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 16.405 "5.12"
"66.249.75.11, 127.0.0.1" www.transitionnetwork.org [01/Sep/2015:19:40:38 +0100] "GET /tags/capital HTTP/1.0" 200 7163 444 7797 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.586 "3.95"
"66.249.75.168" www.transitionnetwork.org [01/Sep/2015:19:40:58 +0100] "GET /tags/tamzin-pinkerton HTTP/1.1" 200 7375 320 8088 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.798 "3.93"

comment:38 Changed 15 months ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 4.89 to 4.99

In terms of registration attempts we are seeing 4-6k a day:

grep POST /var/log/nginx/access.log.1 | grep register | wc -l
4477
zgrep POST /var/log/nginx/access.log.2.gz | grep register | wc -l
4886
zgrep POST /var/log/nginx/access.log.3.gz | grep register | wc -l
5880
zgrep POST /var/log/nginx/access.log.4.gz | grep register | wc -l
4423

A vast number of them come from this User Agent:

"Mozilla/5.0 (iPhone; CPU iPhone OS 8_1_2 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) CriOS/39.0.2171.50 Mobile/12B440 Safari/600.1.4"

But the vast majority of the requests with this User Agent string are from today only -- I guess botnets randomise their UA strings:

grep CriOS /var/log/nginx/access.log | wc -l
17183
grep CriOS /var/log/nginx/access.log.1 | wc -l
5282
zgrep CriOS /var/log/nginx/access.log.2 | wc -l
8
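Ranking all user agents in a log makes this kind of day-to-day comparison easier than grepping for one string at a time. A minimal sketch, assuming the same log format, in which the user agent is the fourth quoted field; `top_user_agents` is a hypothetical helper.

```shell
# Hypothetical helper: print the N most common user agents (default 10) from an
# access log on stdin, as "count<TAB>user agent". With " as the field
# separator the user agent is $8, the fourth quoted field in this log format.
top_user_agents() {
    n="${1:-10}"
    awk -F'"' '
        NF >= 9 { count[$8]++ }
        END { for (ua in count) printf "%d\t%s\n", count[ua], ua }
    ' | sort -k1,1nr -k2 | head -n "$n"
}
# Usage: zcat -f /var/log/nginx/access.log.2.gz | top_user_agents 5
```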

My conclusion from all of this is that I suspect most of the problems we have had with the load on the server recently have been caused by bots...

comment:39 Changed 15 months ago by chris

So the load spiked and, lo and behold, loads of these requests again: