Ticket #677 (closed maintenance: fixed)

Spike in MyISAM (search) database activity, Redis unable to cache such requests

| Reported by: | chris | Owned by: | chris |

|---|---|---|---|

| Priority: | major | Milestone: | Maintenance |

| Component: | Live server | Keywords: | |

| Cc: | jim, planetlarg, ed, sam, aland | Estimated Number of Hours: | 0.0 |

| Add Hours to Ticket: | 0 | Billable?: | yes |

| Total Hours: | 2.82 |

Description (last modified by chris) (diff)

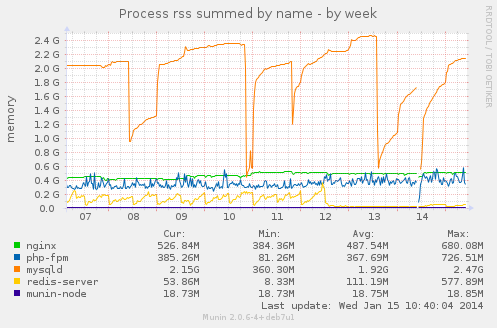

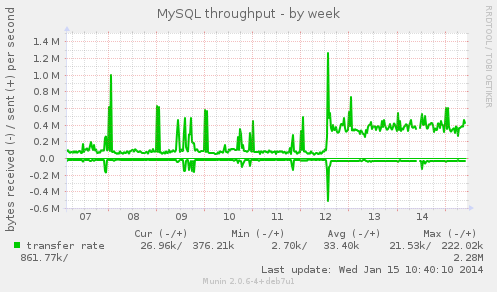

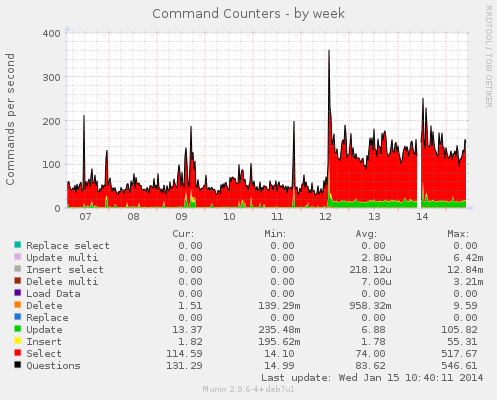

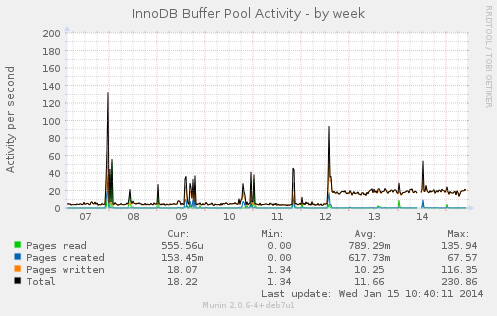

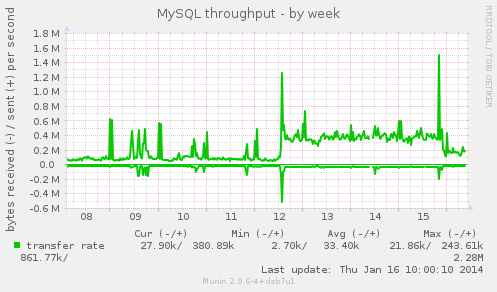

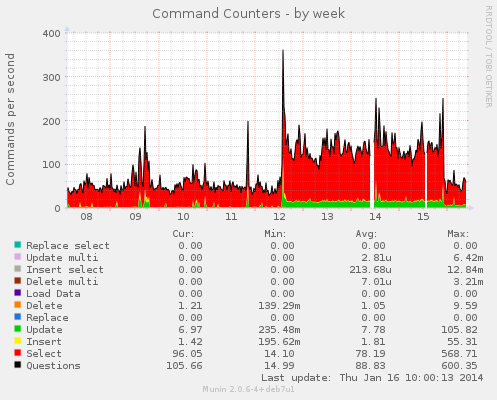

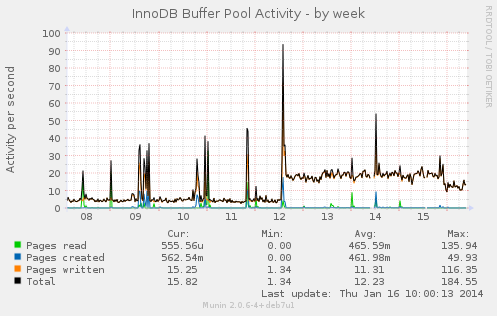

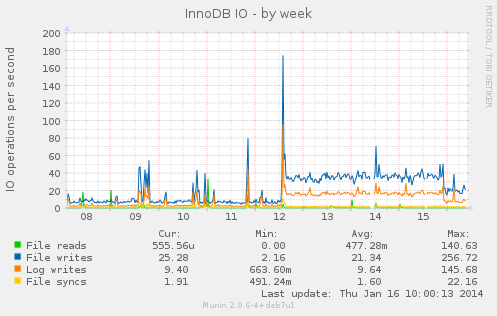

Since Sunday there has been a marked increase in MySQL activity and also a drop in Redis memory use, I suspect these things are related.

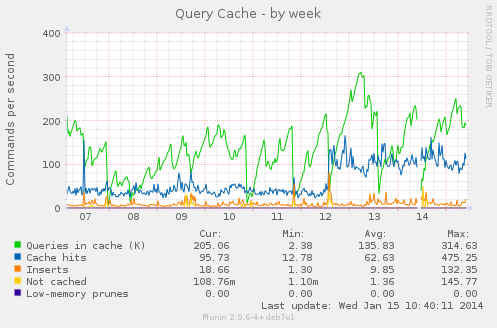

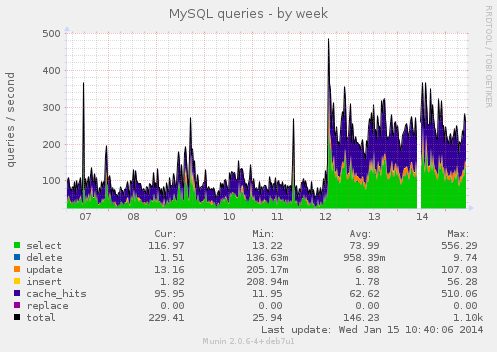

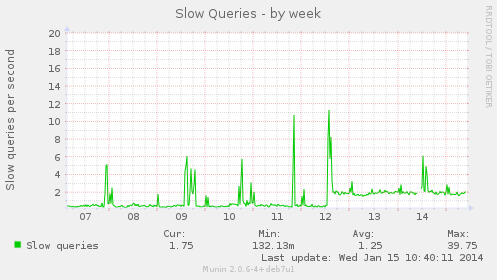

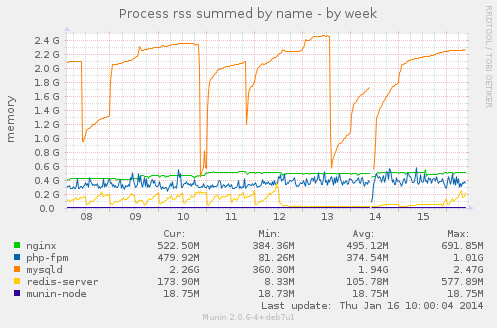

I'll post some Munin graphs below to illustrate this.

Attachments

Change History

comment:1 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.0 to 0.25

This image illustrates the drop in Redis memory use:

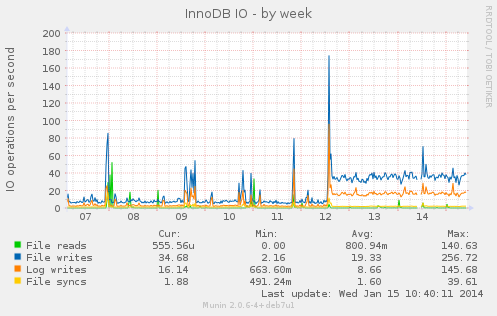

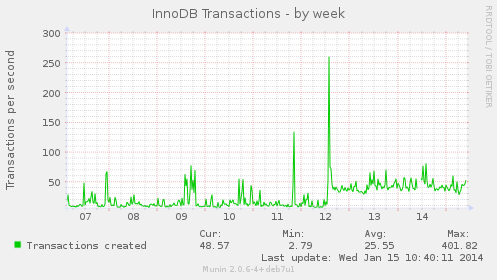

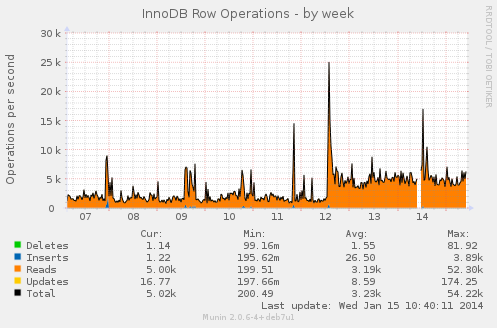

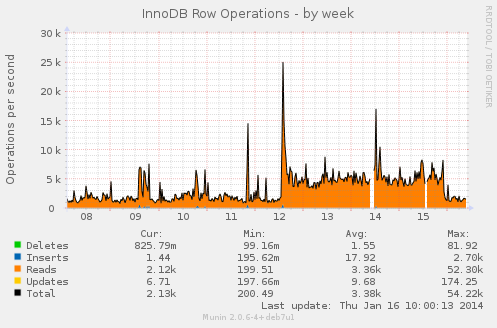

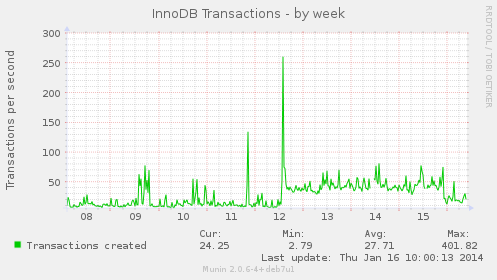

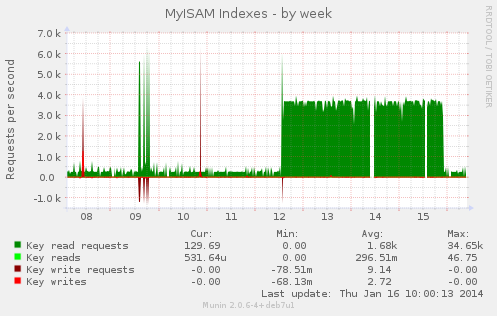

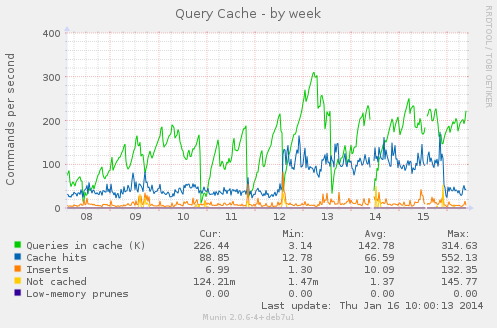

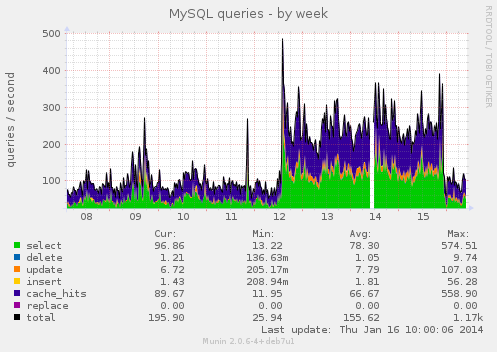

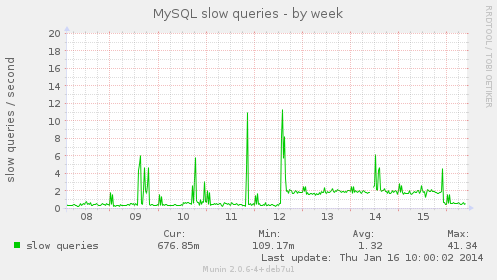

These graphs illustrate the increase in MySQL activity:

We don't have any Munin graphs for Redis, I'm going to see if this plugin works: https://github.com/bpineau/redis-munin

comment:2 Changed 3 years ago by chris

- Status changed from new to accepted

- Description modified (diff)

comment:3 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.25 to 0.5

Installing the Redis munin plugin from https://github.com/bpineau/redis-munin

cd /usr/local/share/munin/plugins/ wget https://raw.github.com/bpineau/redis-munin/master/redis_ chmod 755 redis_ cd /etc/munin/plugins/ ln -s /usr/local/share/munin/plugins/redis_ redis_127.0.0.1_6379

Test it:

munin-run redis_127.0.0.1_6379 multigraph redis_clients clients.value 3 multigraph redis_blocked_clients blocked.value 0 multigraph redis_memory memory.value 55500240 multigraph redis_fragmentation frag.value 1.11 multigraph redis_total_connections connections.value 41287 multigraph redis_expired_keys expired.value 65 multigraph redis_evicted_keys evicted.value 0 multigraph redis_pubsub_channels channels.value 0 multigraph redis_commands commands.value 1820777 hits.value 921236 misses.value 424794 multigraph redis_dbs db0keys.value 3203 db0expires.value 438 puffin:/etc/munin/plugins# munin-run redis_127.0.0.1_6379 multigraph redis_clients clients.value 2 multigraph redis_blocked_clients blocked.value 0 multigraph redis_memory memory.value 55721136 multigraph redis_fragmentation frag.value 1.10 multigraph redis_total_connections connections.value 41298 multigraph redis_expired_keys expired.value 65 multigraph redis_evicted_keys evicted.value 0 multigraph redis_pubsub_channels channels.value 0 multigraph redis_commands commands.value 1821217 hits.value 921524 misses.value 424870 multigraph redis_dbs db0keys.value 3265 db0expires.value 453

So soon we should have some Redis stats here:

I have updated the wiki with a link to this ticket: wiki:PuffinServer#MuninStats

The question I don't know the answer to is why was there a dramatic reduction of Redis MySQL caching on Sunday -- what changed and can we reverse that change? Does anyone have any idea?

comment:4 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.01

- Total Hours changed from 0.5 to 0.51

The only entry in the Trac Timeline for Sunday 2014-01-12 is Jim closing this ticket:640#comment:7

comment:5 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 0.51 to 0.61

The last time we had a dramatic drop in Redis memory use and also an increase on MySQL activity it was due to a missing Redis password, see ticket:554 however this isn't the case this time because no password is set in either /etc/redis/redis.conf or /data/conf/global.inc -- these passwords are commented out:

grep -i redis /data/conf/global.inc | grep pass //$conf['redis_client_password'] = '5m2NsYoF';

grep requirepass /etc/redis/redis.conf # If the master is password protected (using the "requirepass" configuration # requirepass 9r243rmI

comment:6 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.01

- Total Hours changed from 0.61 to 0.62

Zooming in on the Munin graphs the change in behaviour happened at 1:30pm on Sunday 2014-01-12.

comment:7 Changed 3 years ago by chris

- Cc aland added

- Add Hours to Ticket changed from 0.0 to 0.3

- Total Hours changed from 0.62 to 0.92

Looking at some Redis docs:

- http://redis.io/topics/admin

- http://redis.io/topics/problems

- http://redis.io/topics/latency

- http://redis.io/topics/memory-optimization

- http://redis.io/commands/info

Latency test:

redis-cli --latency -h 127.0.0.1 -p 6379 min: 0, max: 84, avg: 0.17 (40459 samples)^C

Following http://redis.io/topics/admin

If you are deploying using a virtual machine that uses the Xen hypervisor you may experience slow fork() times. This may block Redis from a few milliseconds up to a few seconds depending on the dataset size. Check the latency page for more information. This problem is not common to other hypervisors.

Which links to http://redis.io/topics/latency where these is a lot of info. Note that we are using Xen.

INFO:

redis-cli redis 127.0.0.1:6379> INFO # Server redis_version:2.6.16 redis_git_sha1:00000000 redis_git_dirty:0 redis_mode:standalone os:Linux 2.6.32-5-xen-amd64 x86_64 arch_bits:64 multiplexing_api:epoll gcc_version:4.7.2 process_id:30927 run_id:4725cded865a9f73032b22696abd1a6d8f405b6d tcp_port:6379 uptime_in_seconds:59145 uptime_in_days:0 hz:10 lru_clock:568341 # Clients connected_clients:1 client_longest_output_list:0 client_biggest_input_buf:0 blocked_clients:0 # Memory used_memory:55409072 used_memory_human:52.84M used_memory_rss:60973056 used_memory_peak:83923640 used_memory_peak_human:80.04M used_memory_lua:31744 mem_fragmentation_ratio:1.10 mem_allocator:jemalloc-3.2.0 # Persistence loading:0 rdb_changes_since_last_save:1779 rdb_bgsave_in_progress:0 rdb_last_save_time:1389803629 rdb_last_bgsave_status:ok rdb_last_bgsave_time_sec:0 rdb_current_bgsave_time_sec:-1 aof_enabled:0 aof_rewrite_in_progress:0 aof_rewrite_scheduled:0 aof_last_rewrite_time_sec:-1 aof_current_rewrite_time_sec:-1 aof_last_bgrewrite_status:ok # Stats total_connections_received:63496 total_commands_processed:2830397 instantaneous_ops_per_sec:0 rejected_connections:0 expired_keys:118 evicted_keys:0 keyspace_hits:1424102 keyspace_misses:646412 pubsub_channels:0 pubsub_patterns:0 latest_fork_usec:20161 # Replication role:master connected_slaves:0 # CPU used_cpu_sys:444.22 used_cpu_user:129.26 used_cpu_sys_children:10.20 used_cpu_user_children:40.63 # Keyspace db0:keys=3180,expires=571,avg_ttl=60771602

Latency time checked via:

redis-cli redis 127.0.0.1:6379> BGSAVE latest_fork_usec:18888

Not quite sure how this relates to the stats here: http://redis.io/topics/latency

It is suggested at http://redis.io/topics/admin that we should

Add vm.overcommit_memory = 1 to /etc/sysctl.conf and then reboot or run the command sysctl vm.overcommit_memory=1 for this to take effect immediately.

We don't have this set:

cat /etc/sysctl.conf | grep overcommit_memory vm.overcommit_memory = 0

It also suggests:

Make sure to setup some swap in your system (we suggest as much as swap as memory). If Linux does not have swap and your Redis instance accidentally consumes too much memory, either Redis will crash for out of memory or the Linux kernel OOM killer will kill the Redis process.

However we currently have swap disabled in an attempt to improve performance, it is commented out of fstab:

cat /etc/fstab | grep swap # /dev/xvda1 none swap sw 0 0

This is all very interesting but it doesn't explain why redis has stopped caching as much MySQL data...

Does anyone have a clue?

comment:8 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.75

- Total Hours changed from 0.92 to 1.67

Been out all day... OK, I've had a look around just done the following:

~$ cat .redis.pass.txt [password] ~$ grep pass /etc/redis/redis.conf # If the master is password protected (using the "requirepass" configuration # masterauth <master-password> # 150k passwords per second against a good box. This means that you should # use a very strong password otherwise it will be very easy to break. # requirepass [some old password]

So a) the 'requirepass' line was commented out, and b) the password in the commented line was wrong.

AFAIK, Redis ABSOLUTELY requires a password, and Drupal/Aegir? need to know it too!

I've uncommented the line and put the current password in from #~/.redis.pass.txt, then restarted the redis-server service. I've also uncommented the two lines Chris mentioned in comment:5.

Before I'd uncommented the global.inc ones, TN.org was slow as hell, so Redis must have been working. Once all uncommented (and hence sharing the right password), everything suddenly seems faster.

See this view from Munin demonstrating the spike in MySQL activity during me having a password mismatch...

OK, so Sherlock hat back on...

comment:9 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.25

- Priority changed from critical to minor

- Total Hours changed from 1.67 to 1.92

Except, according to the BOA changelog from 2.1.0 (2 Nov 2013):

Redis: Make redis_client_password optional and none by default

SOOOooo... I assume that the update script commented out those lines, and they are not necessary. The fact I've commented them back in and the system works fine shows us that either is ok, but not both at once.

But the key thing is Redis is working, and used by the websites -- without it the sites and server are seriously slower.

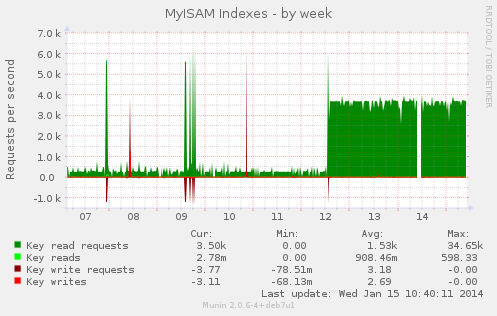

Moving on, I note this Munin chart of MyISAM indexes went up a lot at the same time... Which is funny, because we use InnoDB for our tables... EXCEPT the search tables...

Soo....

Going to the STG site's search index I see:

20% of the site has been indexed. There are 19001 items left to index.

AHA! This is it... The two sites STG and STG2 are the cause! They are re-indexing after I emptied the search_* tables as part of my work in #610.

When I come back from dinner in 20, I'll disable the search modules.

Downgrading, and relaxing!

comment:10 Changed 3 years ago by jim

- Priority changed from minor to major

- Summary changed from Redis has stopped caching MySQL data? to Spike in MyISAM (search) database activity, Redis unable to cache such requests

Fix for search issue, disable on STG:

[as TN Aegir user] tn@puffin:~$ drush @stg.transitionnetwork.org dis search -y The following extensions will be disabled: search Do you really want to continue? (y/n): y search was disabled successfully.

Did same for STG2 site... All future clones of these will inherit this status. I've updated the https://wiki.transitionnetwork.org/BOA_Server/Development_workflow#Cloning_PROD_to_STG and https://wiki.transitionnetwork.org/BOA_Server/Development_workflow#Copying_a_site_for_local_development documentation to include the disablig the core Search module.

BUT'''

I've checked and the cron isn't running on those site -- and the above chart is for MyISAM reads, not writes... So I've jumped to conclusion here.

Upgrading back to Major, and I've enabled the DBLog module on www site to see if we can get any extra info from Drupal.

But the cause of the MyISAM reads is unknown... Renaming this ticket as I think Redis is fine...

comment:11 follow-up: ↓ 24 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.4

- Total Hours changed from 1.92 to 2.32

Got it... I noticed MailChimp? in the DBlog saying it'd synced users every couple of minutes -- this should only happen every few hours...

So it's my bad: I missed adding Elysia Cron module (per [https://tech.transitionnetwork.org/trac/ticket/590#comment:34 my work on performance on #590) and so Cron was running every minute for EVERYTHING! This explains also why Redis' memory footprint was so low -- it was being cleared every minute!

I've just added elysia_cron to makefile. And downloaded/enabled it on PROD, so https://www.transitionnetwork.org/admin/build/cron is now showing all the right goodies.

Phew! Downgrading again, but this can be closed when Chris is happy -- though we might want to re-comment the Redis password per comment:8...

comment:23 Changed 3 years ago by chris

Nice one Jim, it all makes sense now. I don't think we need a redis password, it's set to only listen on 127.0.0.1 and it's not a shared server.

comment:24 in reply to: ↑ 11 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.5

- Status changed from accepted to closed

- Resolution set to fixed

- Total Hours changed from 2.32 to 2.82

Replying to jim:

we might want to re-comment the Redis password per comment:8...

I have commented out the lines with the passwd in /etc/redis/redis.conf and /data/conf/global.inc as I think Redis only needs to be password protected when it's either a shared server and/or redis is listening on external addresses. This has also caused the Redis Munin graphs to start working again:

I have created a Redis section on the wiki:PuffinServer#Redis wiki page.

I have attached the same graphs as above, comment:1 for reference, memory use:

MySQL activity:

I think this ticket is good to close, Jim, thanks foir your work on it last night.