Ticket #555 (closed maintenance: fixed)

Load spikes causing the TN site to be stopped for 15 min at a time

| Reported by: | chris | Owned by: | chris |
|---|---|---|---|
| Priority: | major | Milestone: | Maintenance |
| Component: | Live server | Keywords: | |
| Cc: | ed, jim, aland | Estimated Number of Hours: | 0.25 |
| Add Hours to Ticket: | 0 | Billable?: | yes |
| Total Hours: | 50.18 | | |

Description (last modified by chris) (diff)

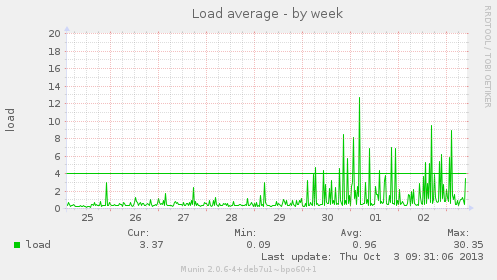

The BOA /var/xdrago/second.sh script is run every minute via the root crontab. If it detects a certain load level, it changes the nginx config to a "high load" config, which results in bots being served 503 errors when they spider the site; see ticket:563. When the load goes higher and hits another threshold, second.sh kills the webserver applications, nginx and php-fpm, and waits until the load has dropped before starting them up again. This was happening once or twice a day following the increase in traffic around the launch of The Power of Just Doing Stuff. This has been addressed by multiplying the thresholds by 5 in second.sh.
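The two-threshold behaviour described above can be illustrated with a minimal sketch. This is not the actual second.sh code: the function name `classify_load` and the threshold values 4 and 8 are assumptions made up for the example, and the real script may well compare the load differently.

```shell
#!/bin/sh
# Hypothetical sketch only; NOT the real /var/xdrago/second.sh.
# classify_load LOAD HIGH_THRESHOLD CRITICAL_THRESHOLD
# Prints "normal", "high" (switch nginx to the high load config)
# or "critical" (stop nginx and php-fpm until the load drops).
classify_load() {
    awk -v load="$1" -v high="$2" -v crit="$3" 'BEGIN {
        if (load + 0 >= crit + 0)      print "critical"
        else if (load + 0 >= high + 0) print "high"
        else                           print "normal"
    }'
}

# On Linux the 1 minute load average is the first field of /proc/loadavg.
# The thresholds 4 and 8 are placeholders, not the values BOA ships with.
if [ -r /proc/loadavg ]; then
    read -r one_min rest < /proc/loadavg
    echo "load ${one_min}: $(classify_load "$one_min" 4 8)"
fi
```

Multiplying both thresholds by 5, as was done to close this ticket, simply moves the points at which the "high" and "critical" branches are taken.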

Original Description

This morning at 10:19:24 I received the following alert from puffin:

    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 6.59
    Time: Wed May 29 10:17:02 2013 +0100
    1 Min Load Avg: 23.39
    5 Min Load Avg: 6.59
    15 Min Load Avg: 2.57
    Running/Total Processes: 44/326

At 10:21:57 I got an alert regarding ssh:

    Service: SSH
    Host: puffin
    Address: puffin.webarch.net
    State: CRITICAL
    Date/Time: Wed May 29 10:21:57 BST 2013
    Additional Info: CRITICAL - Socket timeout after 10 seconds

Then at 10:26:47 ssh appeared to have recovered:

    Service: SSH
    Host: puffin
    Address: puffin.webarch.net
    State: OK
    Date/Time: Wed May 29 10:26:47 BST 2013
    Additional Info: SSH OK - OpenSSH_5.5p1 Debian-6+squeeze3 (protocol 2.0)

But then pingdom reported at 10:29:07:

www.transitionnetwork.org is down since 29/05/2013 10:24:57.

There was then a report regarding Nginx at 10:32:07:

    Notification Type: PROBLEM
    Service: HTTP
    Host: puffin
    Address: puffin.webarch.net
    State: CRITICAL
    Date/Time: Wed May 29 10:32:07 BST 2013
    Additional Info: Connection refused

So at 10:33:47 I ssh'd in and found that php53-fpm and nginx were not running and it took several attempts to get them running again.

The up email from pingdom reported:

www.transitionnetwork.org is UP again at 29/05/2013 10:36:57, after 12m of downtime.

I can't find anything in the logs to indicate what caused the load spike or what caused php-fpm and nginx to stop running.

Attachments

Change History

comment:1 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.0 to 0.25

comment:2 follow-up: ↓ 6 Changed 3 years ago by jim

Ran the same on my VPS and got:

    May 21 04:20:47 babylon lfd[8020]: *LOAD* 5 minute load average is 11.32, threshold is 6 - email sent
    May 22 15:46:04 babylon lfd[18283]: *LOAD* 5 minute load average is 6.13, threshold is 6 - email sent
    May 23 04:18:57 babylon lfd[1874]: *LOAD* 5 minute load average is 14.04, threshold is 6 - email sent
    May 25 04:20:28 babylon lfd[17059]: *LOAD* 5 minute load average is 22.00, threshold is 6 - email sent
    May 27 04:19:07 babylon lfd[1056]: *LOAD* 5 minute load average is 8.93, threshold is 6 - email sent
    May 28 04:20:48 babylon lfd[1050]: *LOAD* 5 minute load average is 8.50, threshold is 6 - email sent
    May 29 04:20:20 babylon lfd[29153]: *LOAD* 5 minute load average is 6.95, threshold is 6 - email sent

Gmail had been auto-archiving my alerts so I missed these, but they are in my mailbox. Tweaked my filter to promote these now.

On Babylon (my system) it started on the 21st -- that does coincide with a system update for me (I left these a few weeks). What follows is a condensed set of entries from late on the 20th, when I did a barracuda up-stable system, minus the stuff I've got that's extra, like NewRelic and Webmin:

    grep "status installed" /var/log/dpkg.log
    ...
    2013-05-20 22:23:02 status installed man-db 2.5.7-8
    2013-05-20 22:23:02 status installed php5-common 5.3.25-1~dotdeb.0
    2013-05-20 22:23:04 status installed php5-cli 5.3.25-1~dotdeb.0
    2013-05-20 22:23:06 status installed php5-fpm 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-mysql 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-imap 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-ldap 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-geoip 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-xsl 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-mcrypt 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-curl 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-xmlrpc 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-sqlite 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-gd 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-apc 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-imagick 5.3.25-1~dotdeb.0
    2013-05-20 22:23:07 status installed php5-gmp 5.3.25-1~dotdeb.0
    2013-05-20 22:23:22 status installed linux-libc-dev 2.6.32-48squeeze3
    2013-05-20 22:23:22 status installed php-pear 5.3.25-1~dotdeb.0
    2013-05-20 22:23:22 status installed php5-dev 5.3.25-1~dotdeb.0
    2013-05-20 22:23:23 status installed libxenstore3.0 4.0.1-5.11
    ...

I'd bet my ass that one of the above is doing this.

Chris, when did the load spikes start for Puffin? And do you have any matching entries around that time?

My money is on an issue in PHP 5.3.25-1.

comment:3 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.5

- Total Hours changed from 0.25 to 0.75

comment:4 Changed 3 years ago by jim

The earlier version was php5-common 5.3.24-1~dotdeb.0, and that gave me no issues... Looking on https://bugs.php.net now.

comment:5 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 0.75 to 1.0

These are all the ones I have in my inbox:

    Apr 22 lfd on puffin.webarch.net: High 5 minute load average alert - 6.01
    Apr 22 lfd on puffin.webarch.net: High 5 minute load average alert - 6.02
    Apr 23 lfd on puffin.webarch.net: High 5 minute load average alert - 7.22
    Apr 24 lfd on puffin.webarch.net: High 5 minute load average alert - 8.59
    Apr 25 lfd on puffin.webarch.net: High 5 minute load average alert - 6.35
    Apr 26 lfd on puffin.webarch.net: High 5 minute load average alert - 9.26
    Apr 28 lfd on puffin.webarch.net: High 5 minute load average alert - 7.39
    Apr 28 lfd on puffin.webarch.net: High 5 minute load average alert - 16.03
    Apr 28 lfd on puffin.webarch.net: High 5 minute load average alert - 6.97
    Apr 29 lfd on puffin.webarch.net: High 5 minute load average alert - 7.67
    Apr 29 lfd on puffin.webarch.net: High 5 minute load average alert - 64.21
    Apr 30 lfd on puffin.webarch.net: High 5 minute load average alert - 7.49
    May 01 lfd on puffin.webarch.net: High 5 minute load average alert - 6.69
    May 03 lfd on puffin.webarch.net: High 5 minute load average alert - 6.09
    May 03 lfd on puffin.webarch.net: High 5 minute load average alert - 7.62
    May 04 lfd on puffin.webarch.net: High 5 minute load average alert - 6.04
    May 05 lfd on puffin.webarch.net: High 5 minute load average alert - 6.04
    May 06 lfd on puffin.webarch.net: High 5 minute load average alert - 6.67
    May 07 lfd on puffin.webarch.net: High 5 minute load average alert - 6.75
    May 07 lfd on puffin.webarch.net: High 5 minute load average alert - 7.40
    May 08 lfd on puffin.webarch.net: High 5 minute load average alert - 7.21
    May 10 lfd on puffin.webarch.net: High 5 minute load average alert - 9.86
    May 10 lfd on puffin.webarch.net: High 5 minute load average alert - 6.02
    May 11 lfd on puffin.webarch.net: High 5 minute load average alert - 12.52
    May 11 lfd on puffin.webarch.net: High 5 minute load average alert - 7.30
    May 11 lfd on puffin.webarch.net: High 5 minute load average alert - 6.60
    May 11 lfd on puffin.webarch.net: High 5 minute load average alert - 9.22
    May 12 lfd on puffin.webarch.net: High 5 minute load average alert - 10.70
    May 12 lfd on puffin.webarch.net: High 5 minute load average alert - 7.26
    May 13 lfd on puffin.webarch.net: High 5 minute load average alert - 6.54
    May 13 lfd on puffin.webarch.net: High 5 minute load average alert - 10.77
    May 14 lfd on puffin.webarch.net: High 5 minute load average alert - 8.79
    May 14 lfd on puffin.webarch.net: High 5 minute load average alert - 7.96
    May 14 lfd on puffin.webarch.net: High 5 minute load average alert - 9.26
    May 16 lfd on puffin.webarch.net: High 5 minute load average alert - 10.61
    May 17 lfd on puffin.webarch.net: High 5 minute load average alert - 6.02
    May 17 lfd on puffin.webarch.net: High 5 minute load average alert - 6.16
    May 18 lfd on puffin.webarch.net: High 5 minute load average alert - 7.40
    May 20 lfd on puffin.webarch.net: High 5 minute load average alert - 16.78
    May 20 lfd on puffin.webarch.net: High 5 minute load average alert - 16.78
    May 20 lfd on puffin.webarch.net: High 5 minute load average alert - 16.78
    May 20 lfd on puffin.webarch.net: High 5 minute load average alert - 16.78
    May 21 lfd on puffin.webarch.net: High 5 minute load average alert - 6.89
    May 22 lfd on puffin.webarch.net: High 5 minute load average alert - 12.08
    May 23 lfd on puffin.webarch.net: High 5 minute load average alert - 12.14
    May 23 lfd on puffin.webarch.net: High 5 minute load average alert - 6.51
    May 23 lfd on puffin.webarch.net: High 5 minute load average alert - 7.20
    May 24 lfd on puffin.webarch.net: High 5 minute load average alert - 9.52
    May 24 lfd on puffin.webarch.net: High 5 minute load average alert - 8.13
    May 24 lfd on puffin.webarch.net: High 5 minute load average alert - 6.18
    May 24 lfd on puffin.webarch.net: High 5 minute load average alert - 6.16
    May 24 lfd on puffin.webarch.net: High 5 minute load average alert - 7.13
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 8.23
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 7.03
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 6.70
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 7.16
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 10.29
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 10.04
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 8.82
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 9.22
    May 25 lfd on puffin.webarch.net: High 5 minute load average alert - 7.43
    May 26 lfd on puffin.webarch.net: High 5 minute load average alert - 8.12
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 6.43
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 7.20
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 6.25
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 6.67
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 9.81
    May 27 lfd on puffin.webarch.net: High 5 minute load average alert - 6.47
    May 28 lfd on puffin.webarch.net: High 5 minute load average alert - 10.78
    May 28 lfd on puffin.webarch.net: High 5 minute load average alert - 6.83
    May 28 lfd on puffin.webarch.net: High 5 minute load average alert - 6.58
    May 28 lfd on puffin.webarch.net: High 5 minute load average alert - 6.01
    May 29 lfd on puffin.webarch.net: High 5 minute load average alert - 6.59

I'm not sure if there were any prior to this; there might have been and I deleted them, or there might not have been any. In any case it's been happening since late April at least.

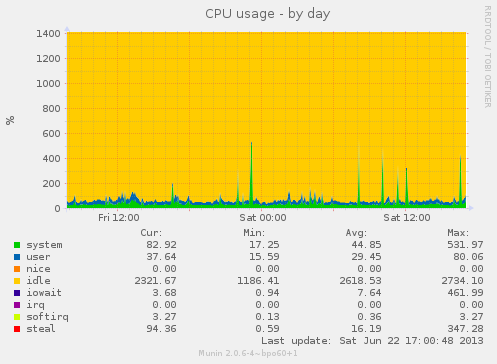

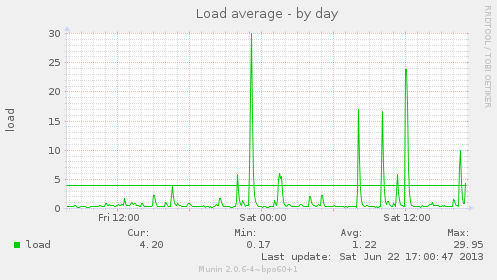

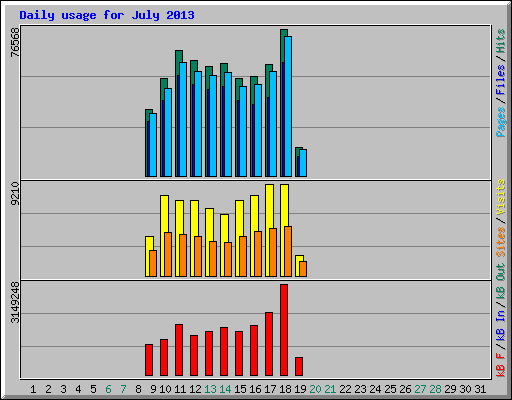

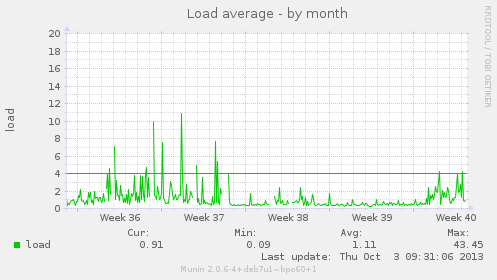

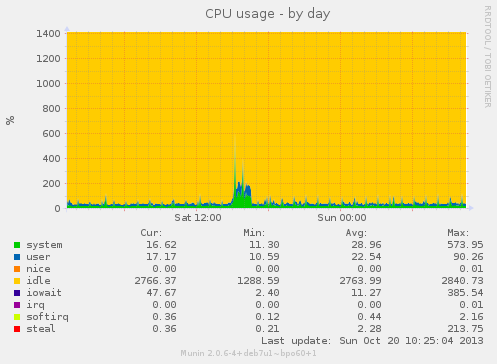

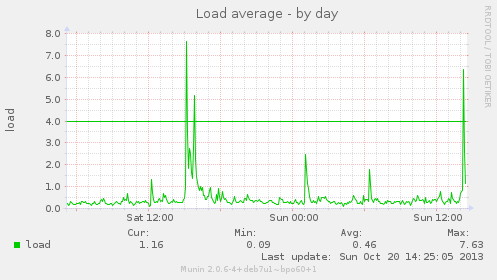

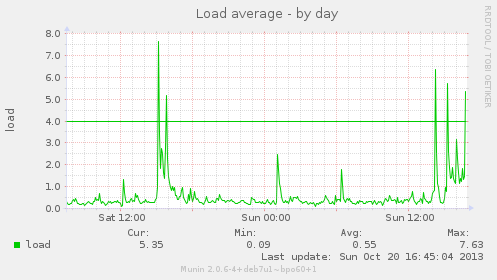

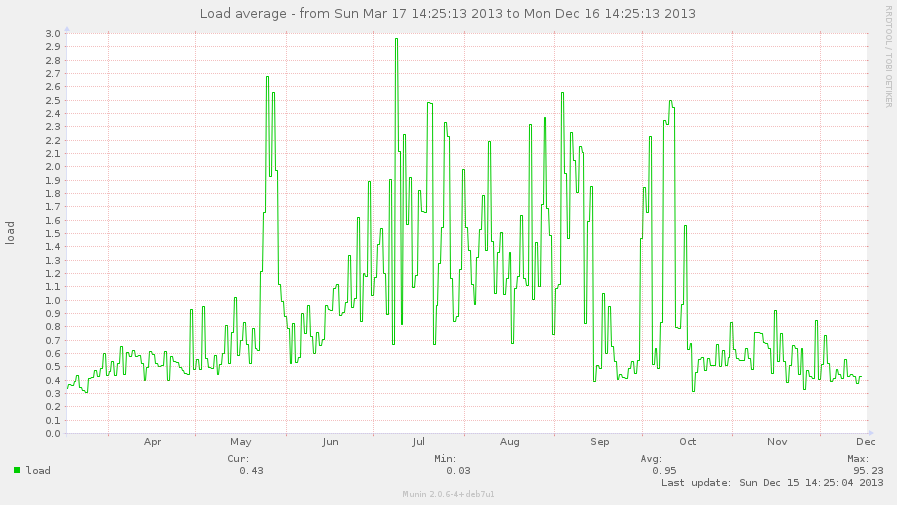

There are 5 days' worth of Munin stats, attached, from March, which show it wasn't an issue then: max load 1.88.

I have edited /etc/logrotate.d/lfd so we will keep a year's worth of lfd logs rather than a week's worth.

Changed 3 years ago by chris

- Attachment puffin-load-week-2013-03.png added

Puffin load from March 2013

comment:6 in reply to: ↑ 2 ; follow-up: ↓ 8 Changed 3 years ago by chris

Replying to jim:

On Babylon (my system) it started on the 21st

How much further back than that do you have logs for?

comment:7 Changed 3 years ago by jim

Nothing on bugs.php.net, so it must be a related sub-package...

I've now scanned my other logs and nothing jumps out... And I note the high load for me usually happens around 4.20am except for one entry.
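The time-of-day pattern can be checked by pulling the hour, minute and load value out of each lfd entry. The following is a rough sketch that assumes the `*LOAD*` line format shown earlier in this ticket; the function name `lfd_load_times` and the log path in the usage comment are assumptions, not something confirmed here.

```shell
#!/bin/sh
# Sketch: print "HH:MM LOAD" for every lfd *LOAD* line.
# Assumes lines shaped like:
#   May 21 04:20:47 babylon lfd[8020]: *LOAD* 5 minute load average is 11.32, threshold is 6 - email sent
# Reads the files given as arguments, or stdin when there are none.
lfd_load_times() {
    awk '/\*LOAD\*/ {
        split($3, t, ":")        # $3 is the HH:MM:SS timestamp
        load = $12               # $12 is the load value with a trailing comma
        sub(/,$/, "", load)
        print t[1] ":" t[2], load
    }' "$@"
}

# Example (log path is an assumption; adjust to wherever lfd logs):
# lfd_load_times /var/log/lfd.log | sort | uniq -c
```

Sorting the output groups entries by time of day, which makes a recurring slot such as 04:20 stand out from one-off bursts.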

I now think that Puffin is not necessarily suffering the same issue as Babylon...

I note one thing though -- on the update for the 20th I did, the Barracuda email sent telling me it was successful said this near the bottom:

    Barracuda [Mon May 20 22:27:27 BST 2013] ==> ALRT: Your OS kernel has been upgraded!
    Barracuda [Mon May 20 22:27:27 BST 2013] ==> ALRT: You *must* reboot immediately to make it active and stay secure!

I did reboot after that...

Anyway, that's all the analysis of Babylon I can/will do for now... I'll do some Googling for similar symptoms around:

- NginX 1.5.0

- PHP 5.3.25 (and related)

- MariaDB 5.5.31.

comment:8 in reply to: ↑ 6 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.0 to 1.25

Replying to chris:

How much further back than that do you have logs for?

I have all the alert emails since the dawn of (Babylon) time, but there's nothing before the 21st that's suspect. Got GZipped logs that go back too, but again nothing in them before (or after TBH) the 20th that looks interesting.

Looking at the Puffin email list, I'd chalk the occasional ~6 load down to a burst of traffic, but definitely a cluster around 11th that's very suspect -- does that coincide with updates?

Last word on Babylon: in case you want to compare, my CGP is here cgp.aegir.i-jk.co.uk - and it's def not a kernel thing, as I'm on 3.9...

Got to go out now, will look at this again tonight.

comment:9 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.25 to 1.5

There was just another load spike, but this time nginx and php53-fpm didn't stop running, lfd email:

    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 34.49
    Time: Wed May 29 15:43:38 2013 +0100
    1 Min Load Avg: 80.66
    5 Min Load Avg: 34.49
    15 Min Load Avg: 13.19
    Running/Total Processes: 90/311

Pingdom reported:

www.transitionnetwork.org is down since 29/05/2013 15:40:57.

Nagios alert (these are the ones that go direct to my phone):

    Notification Type: PROBLEM
    Service: HTTP
    Host: puffin
    Address: puffin.webarch.net
    State: CRITICAL
    Date/Time: Wed May 29 15:45:07 BST 2013
    Additional Info: Connection refused

Pingdom:

www.transitionnetwork.org is UP again at 29/05/2013 15:51:57, after 11m of downtime.

And nagios:

    Notification Type: RECOVERY
    Service: HTTP
    Host: puffin
    Address: puffin.webarch.net
    State: OK
    Date/Time: Wed May 29 15:55:07 BST 2013
    Additional Info: HTTP OK: HTTP/1.1 200 OK - 692 bytes in 0.005 second response time

comment:10 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.5 to 1.75

The site was down again for 5 mins last night. I have set Munin to send me an email if the load goes over 4, and at 30 May 2013 04:05:13 I was sent:

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 10.97 (outside range [:4]).

Then from pingdom at 30 May 2013 04:06:02 +0100:

www.transitionnetwork.org is down since 30/05/2013 04:01:57.

And from pingdom when it came back up, 30 May 2013 04:07:04 +0100:

www.transitionnetwork.org is UP again at 30/05/2013 04:06:57, after 5m of downtime.

From munin, 30 May 2013 04:10:15 +0100:

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 4.08 (outside range [:4]).

And munin again, 30 May 2013 04:15:12 +0100:

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

OKs: load is 1.81.

Again I can't see anything in the logs to indicate the cause of this.

comment:11 Changed 3 years ago by chris

Actually 5 mins before the above happened there was this email from lfd:

    Date: Thu, 30 May 2013 04:01:42 +0100 (BST)
    Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 21.26
    Time: Thu May 30 04:01:41 2013 +0100
    1 Min Load Avg: 65.70
    5 Min Load Avg: 21.26
    15 Min Load Avg: 7.69
    Running/Total Processes: 25/331

And this coincides with these errors in /var/log/syslog:

    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011234 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011237 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011247 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011260 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011239 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011229 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011240 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011231 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011261 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011238 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)
    May 30 04:01:43 puffin mysqld: 130530 4:01:43 [Warning] Aborted connection 1011232 to db: 'transitionnetwor' user: 'transitionnetwor' host: 'localhost' (Unknown error)

I don't know if the high load caused the mysql problem or if the mysql problem caused the high load.

Changed 3 years ago by chris

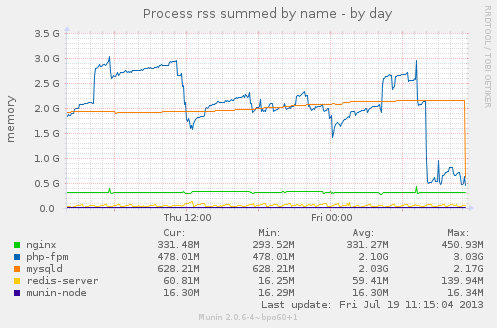

- Attachment puffin-multips_memory-month-2013-05-31.png added

Puffin memory usage by selected application

comment:12 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 1.75 to 2.0

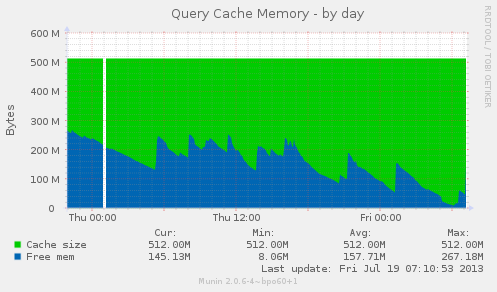

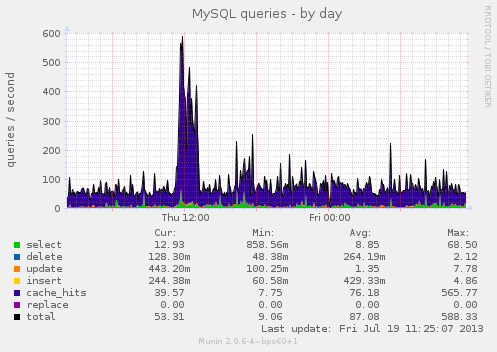

The memory usage of MariaDB/MySQL is still going up and has now hit 2G, half the physical RAM. I don't know if this is related to the load spikes and downtime.

I have installed some additional MySQL munin plugins to get some better stats:

    cd /etc/munin/plugins
    ln -s /usr/share/munin/plugins/mysql_ mysql_bin_relay_log
    ln -s /usr/share/munin/plugins/mysql_ mysql_commands
    ln -s /usr/share/munin/plugins/mysql_ mysql_connections
    ln -s /usr/share/munin/plugins/mysql_ mysql_files_tables
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_bpool
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_bpool_act
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_insert_buf
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_io
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_io_pend
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_log
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_rows
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_semaphores
    ln -s /usr/share/munin/plugins/mysql_ mysql_innodb_tnx
    ln -s /usr/share/munin/plugins/mysql_ mysql_myisam_indexes
    ln -s /usr/share/munin/plugins/mysql_ mysql_network_traffic
    ln -s /usr/share/munin/plugins/mysql_ mysql_qcache
    ln -s /usr/share/munin/plugins/mysql_ mysql_qcache_mem
    ln -s /usr/share/munin/plugins/mysql_ mysql_select_types
    ln -s /usr/share/munin/plugins/mysql_ mysql_slow
    ln -s /usr/share/munin/plugins/mysql_ mysql_sorts
    ln -s /usr/share/munin/plugins/mysql_ mysql_table_locks
    ln -s /usr/share/munin/plugins/mysql_ mysql_tmp_tables

comment:13 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.0 to 2.25

Those munin plugins didn't work due to this bug: http://munin-monitoring.org/ticket/1302

So I have installed this one: https://github.com/kjellm/munin-mysql

comment:14 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.25 to 2.5

These are the install steps for the munin plugin, which is generating graphs here: https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/index.html#mysql

    cd /usr/local/src
    wget https://github.com/kjellm/munin-mysql/archive/master.zip
    unzip master.zip
    cd munin-mysql-master

I then needed to add this to /etc/munin/plugin-conf.d/munin-node as I couldn't get it to work using the debian-sys-maint account:

    [mysql]
    env.mysqlconnection DBI:mysql:mysql;host=127.0.0.1;port=3306
    env.mysqluser root
    env.mysqlpassword XXX

comment:15 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 2.5 to 2.6

We could use a little more debugging too, so I've also set MySQL to log slow queries by uncommenting these lines in /etc/mysql/my.cnf @ line 57:

    slow_query_log = 1
    long_query_time = 5
    slow_query_log_file = /var/log/mysql/sql-slow-query.log

I also set long_query_time to 5 seconds from 10.

Chris, please restart MySQL at your leisure to enable this logging to /var/log/mysql/sql-slow-query.log. It'll be interesting to see if there's a pattern of table locks etc that cause this.
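Before restarting, it may be worth confirming that the three settings really are uncommented. A small sketch of one way to do that follows; the helper name `show_slow_log_settings` is made up for this example, and it only inspects the file on disk, not the running server.

```shell
#!/bin/sh
# Sketch: list the active (uncommented) slow query log settings in a my.cnf style file.
# Commented-out lines (starting with # or ;) are not matched.
show_slow_log_settings() {
    grep -E '^[[:space:]]*(slow_query_log|long_query_time|slow_query_log_file)[[:space:]]*=' "$1"
}

# Example, using the path from this ticket:
# show_slow_log_settings /etc/mysql/my.cnf
```

If all three lines are printed, the settings should take effect on the next MySQL restart.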

comment:16 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.05

- Total Hours changed from 2.6 to 2.65

FYI the only differences in my.cnf between Babylon and Puffin are puffin has higher values for innodb_buffer_pool_size and key_buffer_size, and Babylon has skip-name-resolve commented out, while Puffin does not.

comment:17 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.65 to 2.9

The old mysql munin plugins had stopped working after I fixed the new ones last night. I have now got them all working; this config in /etc/munin/plugin-conf.d/munin-node did the trick:

    [mysql*]
    user root
    env.mysqlopts --defaults-file=/etc/mysql/debian.cnf
    env.mysqluser debian-sys-maint
    env.mysqlconnection DBI:mysql:mysql;mysql_read_default_file=/etc/mysql/debian.cnf

comment:18 Changed 3 years ago by chris

Seems like I spoke too soon regarding the old munin mysql plugins; they work on the command line:

    sudo -i
    cd /etc/munin/plugins
    munin-run mysql_bytes
    recv.value 33653453213
    sent.value 687336777447
    munin-run mysql_queries
    delete.value 464002
    insert.value 451873
    replace.value 0
    select.value 11268953
    update.value 902061
    cache_hits.value 210825250
    munin-run mysql_slowqueries
    queries.value 59
    munin-run mysql_threads
    threads.value 1

But they are not producing graphs and I don't understand why.

comment:19 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 2.9 to 3.15

Munin stats are working now. It looks like all I forgot to do this morning was restart munin-node, and we now have stats again.

I have also restarted MariaDB as requested by Jim on ticket:555#comment:15

comment:20 follow-up: ↓ 21 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 3.15 to 3.25

FYI /var/log/mysql/sql-slow-query.log is rotated every few hours too... So I've logged in and done this:

    screen
    tail -F /var/log/mysql/sql-slow-query.log > ~/jk_screen_sql_slow.log

So this way we won't miss anything... please ignore the screen session, I'll log in and kill it in a few days. (there might well be a more efficient way?)

comment:21 in reply to: ↑ 20 ; follow-up: ↓ 25 Changed 3 years ago by chris

Replying to jim:

there might well be a more efficient way?

The clobbering of logs by BOA is, IMHO, horrible and for us totally unnecessary.

There are tools for log rotation and compression in debian and I'd much rather use these.

BOA should at least give users an option to switch their log clobbering off via a configuration variable.

I'd be happy to find all the BOA scripts that do the log clobbering and comment out these parts and document which scripts need amending on each BOA upgrade and also to raise a ticket with BOA to ask them to allow users to switch off the clobbering.

comment:22 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.67

- Total Hours changed from 3.25 to 3.92

These are all the logs in /var/log/ which have been clobbered:

    mysql/sql-slow-query.log
    nginx/speed_purge.log.1
    php/error_log_53
    php/error_log_52
    php/php53-fpm-error.log
    php/php53-fpm-error.log
    php/php-fpm-slow.log
    php/php-fpm-error.log
    php/error_log_cli_52
    php/php53-fpm-slow.log
    php/error_log_cli_53
    redis/redis-server.log

While looking at these I noticed some lines in php/php53-fpm-error.log (data is still written to the log files between clobberings) which potentially need some action. These entries are about the front page taking over 30 seconds to generate:

    [06-Jun-2013 10:21:32] WARNING: [pool www] child 6037, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (35.152610 sec), logging
    [06-Jun-2013 10:23:04] WARNING: [pool www] child 15643, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (33.938562 sec), logging
    [06-Jun-2013 11:00:33] WARNING: [pool www] child 62121, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "POST /index.php") executing too slow (30.960707 sec), logging

And this indicating that we need more php-fpm processes:

[06-Jun-2013 11:00:39] WARNING: [pool www] server reached pm.max_children setting (10), consider raising it

The two scripts doing the clobbering are /var/xdrago/clear.sh and graceful.sh, and these are the logs they are set to clobber:

    clear.sh:echo rotate > /var/log/php/php-fpm-error.log
    clear.sh:echo rotate > /var/log/php/php-fpm-slow.log
    clear.sh:echo rotate > /var/log/php/php53-fpm-error.log
    clear.sh:echo rotate > /var/log/php/php53-fpm-slow.log
    clear.sh:echo rotate > /var/log/php/error_log_52
    clear.sh:echo rotate > /var/log/php/error_log_53
    clear.sh:echo rotate > /var/log/php/error_log_cli_52
    clear.sh:echo rotate > /var/log/php/error_log_cli_53
    clear.sh:echo rotate > /var/log/redis/redis-server.log
    clear.sh:echo rotate > /var/log/mysql/sql-slow-query.log
    clear.sh: echo rotate > /var/log/nginx/access.log
    graceful.sh: echo rotate > /var/log/nginx/speed_purge.log
    graceful.sh: echo rotate > /var/log/newrelic/nrsysmond.log
    graceful.sh: echo rotate > /var/log/newrelic/php_agent.log
    graceful.sh: echo rotate > /var/log/newrelic/newrelic-daemon.log

I have edited them in vim:

vim /var/xdrago/graceful.sh /var/xdrago/clear.sh

And run this regular expression on them to comment out the lines doing the clobbering:

:1,$s/echo rotate/# echo rotate/gc
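Since this step has to be repeated on each BOA upgrade, a non-interactive equivalent might be handy. The following is only a sketch: the function name `comment_out_clobbering` is made up, and unlike the vim substitution it only touches lines that begin with `echo rotate` (after optional indentation), so an `echo rotate` that follows other code on the same line would be missed.

```shell
#!/bin/sh
# Sketch: comment out lines starting with "echo rotate" in the given files.
# Lines that are already commented out do not match, so re-running is harmless.
comment_out_clobbering() {
    for f in "$@"; do
        # Keep a .bak copy next to each file in case the edit needs reverting,
        # then rewrite the original in place from that copy.
        cp "$f" "$f.bak" &&
        sed 's/^\([[:space:]]*\)echo rotate/\1# echo rotate/' "$f.bak" > "$f"
    done
}

# Example (paths from this ticket; run as root on the server):
# comment_out_clobbering /var/xdrago/clear.sh /var/xdrago/graceful.sh
```

Running `grep "echo rotate"` on both scripts afterwards should show every matching line prefixed with `#`.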

I have updated the BOA update notes to include this step, wiki:PuffinServer#UpgradingBOA.

I'm not sure where BOA is setting the max number of php-fpm processes to 10, in /etc/php5 it is set to 12:

    grep -r "pm.max_children" /etc/php5/ | grep -v ";"
    /etc/php5/fpm/pool.d/www.conf:pm.max_children = 12

I guess this is overridden somewhere so I'm doing some grepping to try to find out where.

comment:23 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.2

- Total Hours changed from 3.92 to 4.12

According to this BOA ticket, https://drupal.org/node/1711596 pm.max_children is set in /etc/php5

These are the key settings in /etc/php5/fpm/pool.d/www.conf:

    pm.max_children = 12
    pm.max_spare_servers = 3
    pm.min_spare_servers = 2
    pm.start_servers = 3

Perhaps min_spare_servers is deducted from max_children to get the number 10?

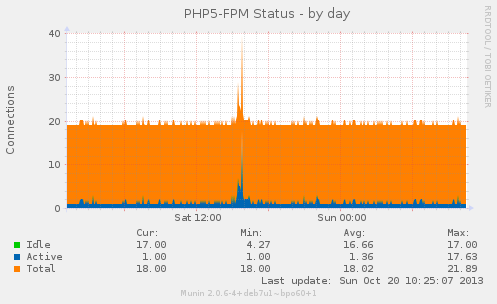

Each php-fpm process uses around 100MB of RAM, see:

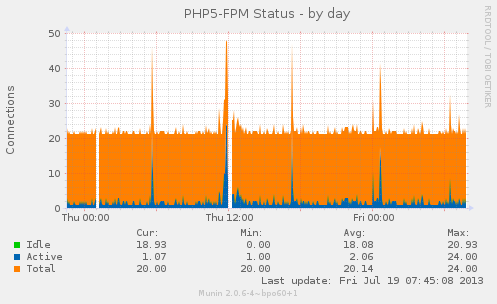

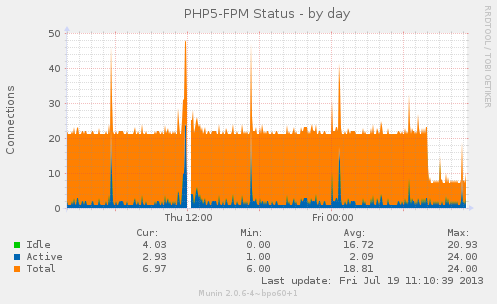

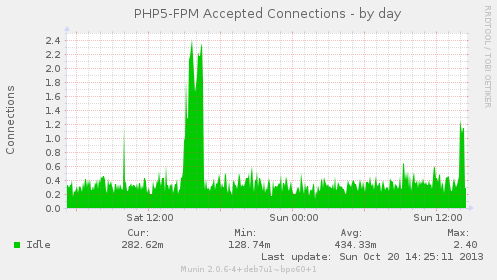

So I think it's probably safe to increase this to 16, or more, as it only spikes above 7 a few times a day, see:

I have changed it to 16 and restarted php53-fpm.

The grep processes are still running, I expect they won't return anything, I'll keep an eye on /var/log/php/php53-fpm-error.log to see what is reported.

comment:24 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.1

- Total Hours changed from 4.12 to 4.22

Interesting comment here on php-fpm settings:

The number of fpm children should be the number of children that you need. As a starting point you want generally at least as many as CPUs as you have, so maybe 1 or 2 or 4 depending on your computer, plus 2 or 3 more for when a child is waiting on something like a database backend. But that is only a general rule. If your child processes are blocking for long periods of time for something, like your php script is retrieving something offsite, you might want more. With just Drupal accessing a database, you don't need that many extra.

We have 14 CPUs so setting it to 16 seems reasonable.
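The arithmetic behind that choice can be written out as a small sketch. The function names are made up for illustration; the inputs are the figures from this ticket (14 CPUs, 2 spare workers, roughly 100MB per php-fpm process).

```shell
#!/bin/sh
# Rule-of-thumb sizing sketch: one worker per CPU plus a few spare.
# suggest_children CPUS SPARE -> prints CPUS + SPARE
suggest_children() {
    echo $(( $1 + $2 ))
}

# estimate_ram_mb CHILDREN MB_PER_CHILD -> prints worst-case PHP-FPM memory use in MB
estimate_ram_mb() {
    echo $(( $1 * $2 ))
}

children=$(suggest_children 14 2)          # 14 CPUs, 2 spare workers
ram_mb=$(estimate_ram_mb "$children" 100)  # ~100MB per php-fpm process
echo "pm.max_children = ${children} (up to ~${ram_mb}MB of RAM)"
# prints: pm.max_children = 16 (up to ~1600MB of RAM)
```

The memory figure is a worst case with all 16 workers busy, which is worth weighing against the 2G that MariaDB was reported to be using earlier in this ticket.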

The grepping has finished and it's clear that the pm.max_children variable is set in /etc/php5/fpm/pool.d/www.conf

comment:25 in reply to: ↑ 21 ; follow-up: ↓ 26 Changed 3 years ago by jim

Replying to chris:

The clobbering of logs by BOA is, IMHO, horrible and for us totally unnecessary. There are tools for log rotation and compression in debian and I'd much rather use these.

BOA should at least give users an option to switch their log clobbering off via a configuration variable.

I completely agree.

You should consider raising a ticket on the Barracuda issue queue, since we're all part of the OS project now... The log rotation is only really useful for servers getting dozens+ hits per second. And as you say, there are better ways.

---

Regarding PHP-FPM workers etc, this landed a few days ago: http://drupalcode.org/project/barracuda.git/commit/65da24cf162f588932a8b9ee140a028a2a7ea869

Also FYI NginX 1.5.1 is included now, so the next up-stable system should grab this.

comment:26 in reply to: ↑ 25 ; follow-up: ↓ 35 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.8

- Total Hours changed from 4.22 to 5.02

Replying to jim:

Replying to chris:

BOA should at least give users an option to switch their log clobbering off via a configuration variable.

I completely agree.

You should consider raising a ticket on the Barracuda issue queue

Thanks, I have posted the following at https://drupal.org/node/2013631

BOA Log Clobbering

By default BOA clobbers several log files, this cron job:

11 * * * * bash /var/xdrago/clear.sh >/dev/null 2>&1

Clobbers these logs:

    grep "echo rotate" /var/xdrago/clear.sh
    echo rotate > /var/log/php/php-fpm-error.log
    echo rotate > /var/log/php/php-fpm-slow.log
    echo rotate > /var/log/php/php53-fpm-error.log
    echo rotate > /var/log/php/php53-fpm-slow.log
    echo rotate > /var/log/php/error_log_52
    echo rotate > /var/log/php/error_log_53
    echo rotate > /var/log/php/error_log_cli_52
    echo rotate > /var/log/php/error_log_cli_53
    echo rotate > /var/log/redis/redis-server.log
    echo rotate > /var/log/mysql/sql-slow-query.log
    echo rotate > /var/log/nginx/access.log

And this cron job:

18 0 * * * bash /var/xdrago/graceful.sh >/dev/null 2>&1

Clobbers these logs:

    grep "echo rotate" /var/xdrago/graceful.sh
    echo rotate > /var/log/nginx/speed_purge.log
    echo rotate > /var/log/newrelic/nrsysmond.log
    echo rotate > /var/log/newrelic/php_agent.log
    echo rotate > /var/log/newrelic/newrelic-daemon.log

On servers where there isn't a problem with disk space it would be nice if there was an option to disable this clobbering and rely on the distribution log rotation scripts as there is potentially useful information that is lost when the logs are clobbered.

---

Regarding PHP-FPM workers etc, this landed a few days ago: http://drupalcode.org/project/barracuda.git/commit/65da24cf162f588932a8b9ee140a028a2a7ea869

Thanks for that, I have now found the config file with 10 in it:

grep -r "pm.max_children" /opt

/opt/local/etc/php53-fpm.conf:; static - a fixed number (pm.max_children) of child processes;

/opt/local/etc/php53-fpm.conf:; pm.max_children - the maximum number of children that can

/opt/local/etc/php53-fpm.conf:; pm.max_children - the maximum number of children that

/opt/local/etc/php53-fpm.conf:pm.max_children = 10

That file contains:

process.max = 12

pm.max_children = 10

pm.start_servers = 6

pm.min_spare_servers = 1

pm.max_spare_servers = 6

I changed these values:

process.max = 20

pm.max_children = 16

And restarted php-fpm53 and soon after got this in the error logs:

[06-Jun-2013 14:00:28] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 8 children, there are 0 idle, and 13 total children

So I have also changed this:

pm.min_spare_servers = 4

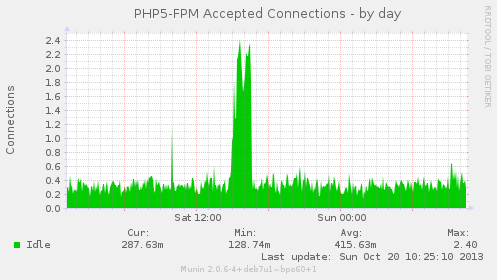

And I have updated the BOA update notes to mention these edits, wiki:PuffinServer#UpgradingBOA and we should keep an eye on these graphs to see what the result is:

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/phpfpm_processes.html

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/phpfpm_memory.html

Also FYI NginX 1.5.1 is included now, so the next up-stable system should grab this.

Interesting, I wonder where BOA gets Nginx from these days; dotdeb only has 1.4 and I thought we were getting it from there.

But we can't be:

nginx -v

nginx version: nginx/1.5.0

In fact it doesn't appear to be installed using aptitude at all:

aptitude search nginx

p nginx - small, powerful, scalable web/proxy server

c nginx-common - small, powerful, scalable web/proxy server - common files

p nginx-dbg - Debugging symbols for nginx

p nginx-doc - small, powerful, scalable web/proxy server - documentation

p nginx-extras - nginx web/proxy server (extended version)

p nginx-extras-dbg - nginx web/proxy server (extended version) - debugging symbols

p nginx-full - nginx web/proxy server (standard version)

p nginx-full-dbg - nginx web/proxy server (standard version) - debugging symbols

p nginx-light - nginx web/proxy server (basic version)

p nginx-light-dbg - nginx web/proxy server (basic version) - debugging symbols

p nginx-naxsi - nginx web/proxy server (version with naxsi)

p nginx-naxsi-dbg - nginx web/proxy server (version with naxsi) - debugging symbols

p nginx-naxsi-ui - nginx web/proxy server - naxsi configuration front-end

p nginx-passenger - nginx web/proxy server (Passenger version)

p nginx-passenger-dbg - nginx web/proxy server (Passenger version) - debugging symbols

comment:27 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 5.02 to 5.27

Still getting some errors in /var/log/php/php53-fpm-error.log:

[06-Jun-2013 16:32:18] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 8 children, there are 3 idle, and 13 total children

[06-Jun-2013 19:00:06] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 8 children, there are 2 idle, and 10 total children

[06-Jun-2013 19:40:09] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 8 children, there are 3 idle, and 11 total children

[06-Jun-2013 20:00:32] WARNING: [pool www] server reached pm.max_children setting (16), consider raising it

And looking at the graphs here:

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/phpfpm_average.html

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/phpfpm_memory.html

The average memory usage is now less even though the peaks are higher, so I have edited /opt/local/etc/php53-fpm.conf and changed:

process.max = 30

pm.max_children = 24

pm.start_servers = 8

pm.min_spare_servers = 6

pm.max_spare_servers = 12

Not sure if these are optimal, will check the log again tomorrow.

comment:28 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 5.27 to 5.52

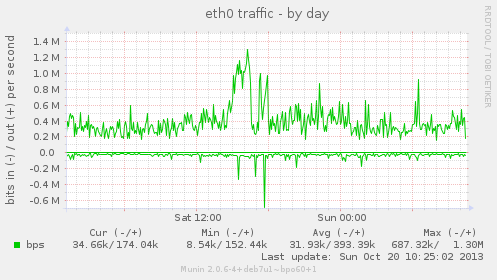

We hit the php-fpm limit again this morning, perhaps because the newsletter also went out this morning; the Nginx requests per second are currently higher than average (2.42/sec compared with 1.38/sec):

[07-Jun-2013 08:00:50] WARNING: [pool www] server reached pm.max_children setting (24), consider raising it

I have edited /opt/local/etc/php53-fpm.conf with a view to reducing the general php-fpm memory usage while allowing it to spike higher:

process.max = 40

pm.max_children = 36

pm.start_servers = 6

pm.min_spare_servers = 4

pm.max_spare_servers = 10

Since we only have one pool, process.max doesn't need to be greater than pm.max_children.
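As a rough sanity check on the values above, here is a minimal Python sketch of the relationships between these settings. The function name `check_pool_settings` and the dict layout are made up for illustration; the rules it encodes are the ones php-fpm applies to a dynamic pool (start_servers between the spare limits, max_spare_servers no larger than max_children) plus the process.max point made above. It does not read the real config file.

```python
def check_pool_settings(s):
    """Return a list of problems found in a dict of php-fpm settings (hypothetical helper)."""
    problems = []
    if not s["pm.min_spare_servers"] <= s["pm.start_servers"] <= s["pm.max_spare_servers"]:
        problems.append("pm.start_servers must lie between pm.min_spare_servers "
                        "and pm.max_spare_servers")
    if s["pm.max_spare_servers"] > s["pm.max_children"]:
        problems.append("pm.max_spare_servers must not exceed pm.max_children")
    # process.max = 0 means "unlimited", so only a non-zero value can cap the pool.
    if s["process.max"] and s["process.max"] < s["pm.max_children"]:
        problems.append("process.max is lower than pm.max_children, "
                        "so the pool could never reach its own limit")
    return problems


# The values set in this comment.
settings = {
    "process.max": 40,
    "pm.max_children": 36,
    "pm.start_servers": 6,
    "pm.min_spare_servers": 4,
    "pm.max_spare_servers": 10,
}

print(check_pool_settings(settings) or "no problems found")
```

With a single pool, the only thing process.max adds is headroom above pm.max_children, which is why the two could be set to the same number.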

I'm not convinced that pm.max_requests needs to be set so low, as we don't have any evidence of memory leaks; we should perhaps try increasing it by a factor of 10 to reduce the number of times php-fpm processes have to be killed and restarted:

; The number of requests each child process should execute before respawning.

; This can be useful to work around memory leaks in 3rd party libraries. For

; endless request processing specify '0'. Equivalent to PHP_FCGI_MAX_REQUESTS.

; Default Value: 0

pm.max_requests = 500

comment:29 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 5.52 to 5.77

The max php-fpm limit was hit again:

[09-Jun-2013 08:05:24] WARNING: [pool www] server reached pm.max_children setting (36), consider raising it

The context for this limit being hit:

[09-Jun-2013 08:05:06] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 8 children, there are 0 idle, and 20 total children

[09-Jun-2013 08:05:07] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 16 children, there are 0 idle, and 24 total children

[09-Jun-2013 08:05:08] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 32 children, there are 0 idle, and 28 total children

[09-Jun-2013 08:05:09] WARNING: [pool www] seems busy (you may need to increase pm.start_servers, or pm.min/max_spare_servers), spawning 32 children, there are 3 idle, and 32 total children

[09-Jun-2013 08:05:24] WARNING: [pool www] server reached pm.max_children setting (36), consider raising it

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26743, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.894680 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26330, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.119437 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26329, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (31.277767 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26328, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.266466 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26082, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (30.955183 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 26066, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (30.320056 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 11592, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (30.421503 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 11590, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.509934 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 11589, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (30.739996 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 11586, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.881669 sec), logging

[09-Jun-2013 08:05:33] WARNING: [pool www] child 10656, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (32.704514 sec), logging

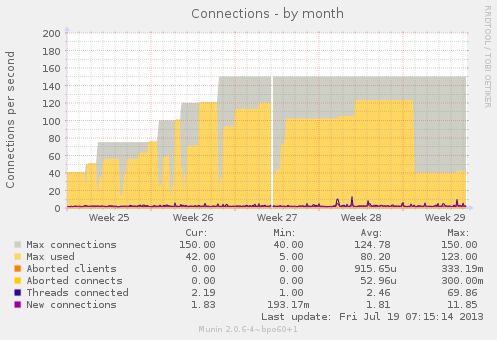

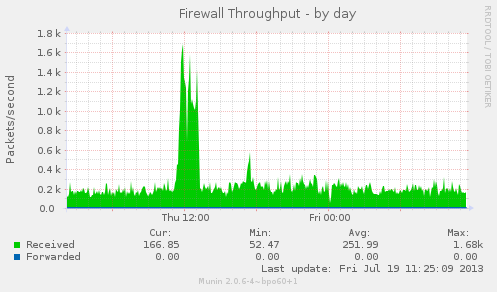

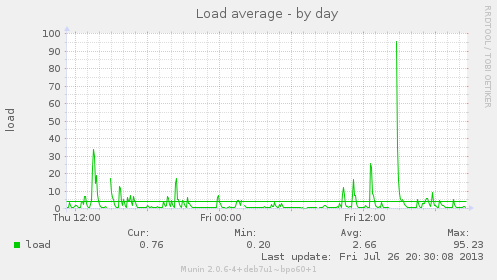

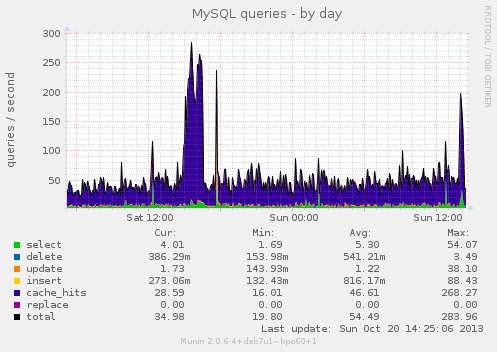

Looking at this graph it looks like we might have also hit the max number of mysql connections:

So these values have been edited in /etc/mysql/my.cnf, they were set at 30:

max_connections = 40

max_user_connections = 40

And mysql was restarted.

And /opt/local/etc/php53-fpm.conf was edited:

process.max = 50

pm.max_children = 42

pm.max_spare_servers = 8

And php53-fpm restarted.

comment:30 Changed 3 years ago by ed

My understanding of the BOA rig was that it could withstand a slashdotting. This ticket is making me increasingly nervous - particularly as we are about to have articles in Guardian and Daily Mail, and there is about to be a sustained online PR campaign around Rob's book from now all across July.

comment:31 follow-up: ↓ 32 Changed 3 years ago by jim

- Add Hours to Ticket changed from 0.0 to 0.25

- Total Hours changed from 5.77 to 6.02

Hi Ed, this is not a question of a 'slashdotting' -- the server can handle that, that workload is proven to be ok.

This is about a busy site on a server, which - due to either a misconfiguration, a database issue or something wrong with our current versions of the web stack packages (PHP, MySQL etc) - has less capacity than is expected.

It's clear from Chris and my examinations that the PHP-FPM processes are being used up because something is making them hang for 30s+. I have some suspicions but nothing more, as has Chris.

My current theory is this: something is holding/blocking some requests, and they stack up behind this. I see this on my machine ONLY at 4.20 in the morning when some scripts backup/daily tasks are running. Puffin is getting this more and at other times, so it's either not the same issue, or not the same trigger.

My hunch is either:

- our versions of PHP, MySQL, NGINX or other related stuff have a bug that's blocking/locking things

- Our tn.org Drupal database has some issue around the mixed MyISAM/INNODB setup that is causing locks (this is true on my sites, which could explain my 4.20am load warning)

- Puffin has some other issue not present on Babylon.

The danger is that if we have DB locking, and therefore lots of PHP processes queued up/blocking on that IO, that simply adding more workers over and above the BOA standard quantities risks eating all the memory and knocking the server over.
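To put a rough number on that risk, here is a back-of-the-envelope sketch in Python. The pm.max_children values are the ones tried so far in this ticket; the per-worker memory figure is purely hypothetical and should be replaced with a real reading from the munin phpfpm_memory graph before drawing any conclusion.

```python
def worst_case_pool_memory_mb(max_children, per_worker_mb):
    """Memory used if every allowed worker is alive at once, e.g. all blocked on the DB."""
    return max_children * per_worker_mb


# Hypothetical per-worker footprint in MB; NOT measured on puffin.
PER_WORKER_MB = 60

# pm.max_children values set so far in this ticket.
for max_children in (10, 16, 24, 36, 42):
    total = worst_case_pool_memory_mb(max_children, PER_WORKER_MB)
    print(f"pm.max_children = {max_children:2d} -> up to {total} MB")
```

The point is only that the worst case grows linearly with pm.max_children, so each increase should be checked against the free memory the server actually has.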

Anyway, I will continue to look into this on both Puffin and Babylon. It's telling that there are no issues raised on the Barracuda issue list like this, and it's used by thousands. So for now I think it could be a system- or Drupal-level issue.

More as it happens...

comment:32 in reply to: ↑ 31 Changed 3 years ago by chris

Replying to jim:

The danger is that if we have DB locking, and therefore lots of PHP processes queued up/blocking on that IO, that simply adding more workers over and above the BOA standard quantities risks eating all the memory and knocking the server over.

Yes, I have also been very concerned about this and have been keeping a very close eye on the memory usage and swap:

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/multips_memory.html

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/memory.html

- https://penguin.transitionnetwork.org/munin/transitionnetwork.org/puffin.transitionnetwork.org/swap.html

The php-fpm and mysql process limits have been increased slowly and with this concern very much in mind.

There is also an emergency plan if the shit really does hit the fan in a massive way, there is 48GB of RAM sitting on my desk which can be added to the server ;-) In other words Ed -- don't worry too much, the site isn't going to go down if we can help it :-)

comment:33 Changed 3 years ago by ed

nice, ta

comment:34 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.5

- Total Hours changed from 6.02 to 6.52

Jim, have you taken a look at /var/log/php/php53-fpm-slow.log? Now that it is no longer being clobbered there is quite a lot of info in it, which might help: a trace is written there every time a request takes more than 30 seconds and is logged in /var/log/php/php53-fpm-error.log. For example, this is the last one:

[10-Jun-2013 10:39:30] WARNING: [pool www] child 47463, script '/data/disk/tn/static/transition-network-d6-004/index.php' (request: "GET /index.php") executing too slow (38.153063 sec), logging

[10-Jun-2013 10:39:30] NOTICE: child 47463 stopped for tracing

[10-Jun-2013 10:39:30] NOTICE: about to trace 47463

[10-Jun-2013 10:39:30] NOTICE: finished trace of 47463

There is a corresponding entry in the slowlog:

[10-Jun-2013 10:39:30] [pool www] pid 47463

script_filename = /data/disk/tn/static/transition-network-d6-004/index.php

[0x0000000002686fb8] fsockopen() /data/disk/tn/static/transition-network-d6-004/includes/common.inc:475

[0x0000000002684190] drupal_http_request() /data/disk/tn/static/transition-network-d6-004/sites/all/modules/contrib/image_resize_filter/image_resize_filter.module:366

[0x0000000002683c38] image_resize_filter_get_images() /data/disk/tn/static/transition-network-d6-004/sites/all/modules/contrib/image_resize_filter/image_resize_filter.module:59

[0x00007fff91780a00] image_resize_filter_filter() unknown:0

[0x0000000002683910] call_user_func_array() /data/disk/tn/static/transition-network-d6-004/includes/module.inc:532

[0x0000000002683178] module_invoke() /data/disk/tn/static/transition-network-d6-004/modules/filter/filter.module:455

[0x0000000002682d80] check_markup() /data/disk/tn/static/transition-network-d6-004/modules/node/node.module:1058

[0x0000000002682a48] node_prepare() /data/disk/tn/static/transition-network-d6-004/modules/node/node.module:1102

[0x0000000002682698] node_build_content() /data/disk/tn/static/transition-network-d6-004/modules/node/node.module:1023

[0x0000000002682348] node_view() /data/disk/tn/static/transition-network-d6-004/modules/node/node.module:1118

[0x00000000026821d8] node_show() /data/disk/tn/static/transition-network-d6-004/modules/node/node.module:1814

[0x0000000002681c00] node_page_view() /data/disk/tn/static/transition-network-d6-004/sites/all/modules/contrib/ctools/page_manager/plugins/tasks/node_view.inc:107

[0x00007fff91781510] page_manager_node_view() unknown:0

[0x0000000002681828] call_user_func_array() /data/disk/tn/static/transition-network-d6-004/includes/menu.inc:360

[0x00000000026814d8] menu_execute_active_handler() /data/disk/tn/static/transition-network-d6-004/index.php:17
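One way to look for patterns across many of these traces is to tally the innermost frame of each one, i.e. the function the worker was actually stuck in. The following is a sketch in Python, assuming the slowlog on disk has one frame per line in the form `[0xADDR] function() file:line` following a `script_filename` line; the function name `innermost_frames` is made up, and the sample is an abbreviated copy of the entry above.

```python
import re
from collections import Counter

# One stack frame: "[0x...] function() /path/to/file:line"
FRAME = re.compile(r"^\[0x[0-9a-f]+\]\s+(\S+)\(\)\s+(\S+):(\d+)$")


def innermost_frames(slowlog_text):
    """Count the first (innermost) frame function of every trace in the slowlog text."""
    counts = Counter()
    expecting_first_frame = False
    for line in slowlog_text.splitlines():
        line = line.strip()
        if line.startswith("script_filename"):
            # A new trace starts; the next frame line is the innermost call.
            expecting_first_frame = True
            continue
        match = FRAME.match(line)
        if match and expecting_first_frame:
            counts[match.group(1)] += 1
            expecting_first_frame = False
    return counts


# Abbreviated copy of the trace above (first frames only).
SAMPLE = """\
[10-Jun-2013 10:39:30] [pool www] pid 47463
script_filename = /data/disk/tn/static/transition-network-d6-004/index.php
[0x0000000002686fb8] fsockopen() /data/disk/tn/static/transition-network-d6-004/includes/common.inc:475
[0x0000000002684190] drupal_http_request() /data/disk/tn/static/transition-network-d6-004/sites/all/modules/contrib/image_resize_filter/image_resize_filter.module:366
"""

print(innermost_frames(SAMPLE).most_common())
```

Run against the whole of /var/log/php/php53-fpm-slow.log, a tally like this would show whether most slow requests end in the same call (here fsockopen(), an outbound HTTP request) or are spread across unrelated code.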

However, reading through this log I can't see any really obvious patterns. We might be getting fewer than we were, but Sunday is always a slow day for the site (there were 17 yesterday, Sunday, 84 the day before and 52 the day before that, the first full day we have logs for):

grep "stopped for tracing" /var/log/php/php53-fpm-error.log | grep 09-Jun-2013 | wc -l

17

grep "stopped for tracing" /var/log/php/php53-fpm-error.log | grep 08-Jun-2013 | wc -l

84

grep "stopped for tracing" /var/log/php/php53-fpm-error.log | grep 07-Jun-2013 | wc -l

52

There have been 6 so far today.
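The per-day grep above can also be done in one pass. This is a small Python sketch, assuming the error log keeps the `[DD-Mon-YYYY HH:MM:SS]` prefix seen in the excerpts; `traces_per_day` is a made-up helper name and the sample lines are copied from the excerpt earlier in this comment, with the first one shortened.

```python
import re
from collections import Counter

# Leading timestamp, e.g. "[10-Jun-2013 10:39:30]"; the date part is captured.
STAMP = re.compile(r"^\[(\d{2}-[A-Za-z]{3}-\d{4}) \d{2}:\d{2}:\d{2}\]")


def traces_per_day(lines):
    """Count 'stopped for tracing' notices per date string."""
    counts = Counter()
    for line in lines:
        if "stopped for tracing" not in line:
            continue
        match = STAMP.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample = [
    "[10-Jun-2013 10:39:30] WARNING: [pool www] child 47463 ... executing too slow (38.153063 sec), logging",
    "[10-Jun-2013 10:39:30] NOTICE: child 47463 stopped for tracing",
    "[10-Jun-2013 10:39:30] NOTICE: about to trace 47463",
    "[10-Jun-2013 10:39:30] NOTICE: finished trace of 47463",
]

for day, count in traces_per_day(sample).items():
    print(day, count)
```

To run it on the real log, pass the open file object, for example `traces_per_day(open("/var/log/php/php53-fpm-error.log"))`.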

comment:35 in reply to: ↑ 26 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.71

- Total Hours changed from 6.52 to 7.23

Replying to chris:

Replying to jim:

Replying to chris:

BOA should at least give user a option to switch their log clobbering off via a configuration variable.

I completely agree.

You should consider raising a ticket on the Barracuda issue queue

Thanks, I have posted the following at https://drupal.org/node/2013631

The ticket has been closed (won't fix):

These logs have no configured logrotate scripts, so we just wipe them out. We do this also because on fast enough system with SSD it is possible to quickly fill the disk with logs if there is something which keeps generating errors. We have seen servers crashed because of this, hence this aggressive procedure.

I don't consider it worth adding extra logrotate scripts since any really useful errors you can find in the syslog anyway, but feel free to disagree and submit patch for review and re-open.

Also, you seems to use really old BOA version, because we don't purge Nginx access log, unless there is /root/.high_traffic.cnf control file.

So, it doesn't appear to be worth following this up further with the BOA people.

These are the logs which are not rotated and which are of a size that makes them worth rotating:

80K /var/log/mysql/sql-slow-query.log

120K /var/log/php/php53-fpm-error.log

218K /var/log/php/php53-fpm-slow.log

113M /var/log/php/www.access.log

I have edited /etc/logrotate.d/mysql-server, commenting out the rotation of logs that MariaDB doesn't create and replacing it with the slow query log, and also increased the number of days to keep logs from 7 to 30:

#/var/log/mysql.log /var/log/mysql/mysql.log /var/log/mysql/mysql-slow.log {

/var/log/mysql/sql-slow-query.log {

rotate 30

I have copied the nginx logrotate script to /etc/logrotate.d/php-fpm and edited it to:

/var/log/php/*.log {

daily

missingok

rotate 30

compress

delaycompress

notifempty

create 0640 www-data adm

sharedscripts

postrotate

[ ! -f /var/run/php53-fpm.pid ] || kill -USR1 `cat /var/run/php53-fpm.pid`

endscript

}

The scripts were then manually run to test them:

logrotate -vf /etc/logrotate.d/mysql-server

logrotate -vf /etc/logrotate.d/php-fpm

comment:36 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.7

- Total Hours changed from 7.23 to 7.93

There was another period of downtime last night at around 10 pm for 8 mins, with a load peak of over 80. I can't see any indication in the logs of what caused this; there is a corresponding gap in some of the munin stats but no clues there either. Some detail follows, but it's not very illuminating.

There were around 180 hits recorded from the Guardian article yesterday and around 80 from the Alternet article -- usually Sunday isn't very busy, perhaps this is related, but this wasn't a massive spike in traffic so it shouldn't have caused this effect.

Email Alerts

These are the email alerts I got:

Date: Sun, 16 Jun 2013 22:03:50 +0100 (BST)

Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 39.16

Time: Sun Jun 16 22:03:49 2013 +0100

1 Min Load Avg: 86.33

5 Min Load Avg: 39.16

15 Min Load Avg: 15.39

Running/Total Processes: 88/417

Date: Sun, 16 Jun 2013 22:05:15 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 30.48 (outside range [:8]).

Date: Sun, 16 Jun 2013 22:07:07 +0100

Subject: ** PROBLEM Service Alert: puffin/HTTP is CRITICAL **

***** Nagios *****

Notification Type: PROBLEM

Service: HTTP

Host: puffin

Address: puffin.webarch.net

State: CRITICAL

Date/Time: Sun Jun 16 22:07:07 BST 2013

Additional Info: Connection refused

Date: Sun, 16 Jun 2013 22:08:04 +0100

Subject: DOWN alert: www.transitionnetwork.org (www.transitionnetwork.org) is DOWN

PingdomAlert DOWN: www.transitionnetwork.org (www.transitionnetwork.org) is down since 16/06/2013 22:03:57.

Date: Sun, 16 Jun 2013 22:10:15 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 11.31 (outside range [:8]).

And then it came back up:

Date: Sun, 16 Jun 2013 22:12:01 +0100

Subject: UP alert: www.transitionnetwork.org (www.transitionnetwork.org) is UP

PingdomAlert UP: www.transitionnetwork.org (www.transitionnetwork.org) is UP again at 16/06/2013 22:11:57, after 8m of downtime.

Date: Sun, 16 Jun 2013 22:12:07 +0100

Subject: ** RECOVERY Service Alert: puffin/HTTP is OK **

***** Nagios *****

Notification Type: RECOVERY

Service: HTTP

Host: puffin

Address: puffin.webarch.net

State: OK

Date/Time: Sun Jun 16 22:12:07 BST 2013

Additional Info: HTTP OK: HTTP/1.1 200 OK - 692 bytes in 0.006 second response time

Date: Sun, 16 Jun 2013 22:15:14 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

WARNINGs: load is 4.44 (outside range [:4]).

Date: Sun, 16 Jun 2013 22:20:17 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

OKs: load is 1.91.

Log Entries

There are these in the php log:

php53-fpm-error.log.1:[16-Jun-2013 17:22:32] WARNING: [pool www] server reached pm.max_children setting (42), consider raising it

php53-fpm-error.log.1:[16-Jun-2013 22:02:55] WARNING: [pool www] server reached pm.max_children setting (42), consider raising it

But I can't find anything much else to indicate what happened.

Settings Changed

I realise that this isn't the answer but have further tweaked these values in /etc/mysql/my.cnf, they were set at 40:

max_connections = 50

max_user_connections = 50

And mysql was restarted.

And /opt/local/etc/php53-fpm.conf was edited:

process.max = 60

pm.max_children = 50

And php53-fpm restarted.

comment:37 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.3

- Total Hours changed from 7.93 to 8.23

The site went down again yesterday for about 8 mins at 3:30pm. The load peaked at 44.

Email Alerts

These are the emails I got as it went down:

Date: Mon, 17 Jun 2013 15:00:50 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

WARNINGs: load is 4.44 (outside range [:4]).

Date: Mon, 17 Jun 2013 15:05:15 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

OKs: load is 2.20.

Date: Mon, 17 Jun 2013 15:28:30 +0100 (BST)

Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 8.00

Time: Mon Jun 17 15:26:55 2013 +0100

1 Min Load Avg: 25.40

5 Min Load Avg: 8.00

15 Min Load Avg: 3.40

Running/Total Processes: 47/331

Date: Mon, 17 Jun 2013 15:30:41 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 44.16 (outside range [:8]).

Date: Mon, 17 Jun 2013 15:31:07 +0100

Subject: ** PROBLEM Service Alert: puffin/HTTP is CRITICAL **

***** Nagios *****

Notification Type: PROBLEM

Service: HTTP

Host: puffin

Address: puffin.webarch.net

State: CRITICAL

Date/Time: Mon Jun 17 15:31:07 BST 2013

Additional Info: Connection refused

Date: Mon, 17 Jun 2013 15:34:07 +0100

Subject: DOWN alert: www.transitionnetwork.org (www.transitionnetwork.org) is DOWN

PingdomAlert DOWN: www.transitionnetwork.org (www.transitionnetwork.org) is down since 17/06/2013 15:29:57.

Date: Mon, 17 Jun 2013 15:35:13 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 17.69 (outside range [:8]).

And then it recovered:

Date: Mon, 17 Jun 2013 15:39:05 +0100

Subject: UP alert: www.transitionnetwork.org (www.transitionnetwork.org) is UP

PingdomAlert UP: www.transitionnetwork.org (www.transitionnetwork.org) is UP again at 17/06/2013 15:38:57, after 9m of downtime.

Date: Mon, 17 Jun 2013 15:40:18 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

WARNINGs: load is 6.71 (outside range [:4]).

Date: Mon, 17 Jun 2013 15:41:07 +0100

Subject: ** RECOVERY Service Alert: puffin/HTTP is OK **

***** Nagios *****

Notification Type: RECOVERY

Service: HTTP

Host: puffin

Address: puffin.webarch.net

State: OK

Date/Time: Mon Jun 17 15:41:07 BST 2013

Additional Info: HTTP OK: HTTP/1.1 200 OK - 692 bytes in 0.004 second response time

Date: Mon, 17 Jun 2013 15:45:15 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

OKs: load is 2.80.

Log Entries

There are a lot of these in the php-fpm error log just before the server went down:

[17-Jun-2013 15:25:40] WARNING: [pool www] child 43600, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (30.757526 sec), logging

[17-Jun-2013 15:25:40] WARNING: [pool www] child 35447, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (37.827107 sec), logging

[17-Jun-2013 15:25:40] WARNING: [pool www] child 29997, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.862649 sec), logging

[17-Jun-2013 15:25:40] WARNING: [pool www] child 29468, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (35.815989 sec), logging

[17-Jun-2013 15:25:40] WARNING: [pool www] child 29153, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (31.050583 sec), logging

[17-Jun-2013 15:25:50] WARNING: [pool www] child 45198, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (30.144049 sec), logging

[17-Jun-2013 15:25:50] WARNING: [pool www] child 35536, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (35.534339 sec), logging

[17-Jun-2013 15:25:50] WARNING: [pool www] child 35173, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (36.196559 sec), logging

[17-Jun-2013 15:25:50] WARNING: [pool www] child 29155, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (34.026623 sec), logging

[17-Jun-2013 15:26:10] WARNING: [pool www] child 45210, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (30.262363 sec), logging

[17-Jun-2013 15:26:10] WARNING: [pool www] child 45208, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (40.206509 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45241, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (36.576699 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45240, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (36.708073 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45238, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (37.866814 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45228, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (38.811486 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45217, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.173565 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 45212, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.873535 sec), logging

[17-Jun-2013 15:26:20] WARNING: [pool www] child 29468, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (38.069448 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45259, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (31.301978 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45257, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (31.574328 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45253, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (32.682662 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45250, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (33.273894 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45248, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (34.214245 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45246, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (36.041068 sec), logging

[17-Jun-2013 15:26:30] WARNING: [pool www] child 45245, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (34.248792 sec), logging

[17-Jun-2013 15:27:00] WARNING: [pool www] child 45283, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (35.404809 sec), logging

[17-Jun-2013 15:27:00] WARNING: [pool www] child 45279, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (36.632685 sec), logging

[17-Jun-2013 15:27:00] WARNING: [pool www] child 45258, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (38.613425 sec), logging

[17-Jun-2013 15:27:00] WARNING: [pool www] child 45244, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (35.865239 sec), logging

[17-Jun-2013 15:27:11] WARNING: [pool www] child 45243, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (32.187872 sec), logging

[17-Jun-2013 15:27:21] WARNING: [pool www] child 45313, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (30.217324 sec), logging

[17-Jun-2013 15:27:21] WARNING: [pool www] child 45292, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (37.632868 sec), logging

[17-Jun-2013 15:27:21] WARNING: [pool www] child 45289, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.336141 sec), logging

[17-Jun-2013 15:27:21] WARNING: [pool www] child 45263, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (30.367704 sec), logging

[17-Jun-2013 15:27:51] WARNING: [pool www] child 45324, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (31.800232 sec), logging

[17-Jun-2013 15:27:51] WARNING: [pool www] child 45317, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "HEAD /index.php") executing too slow (32.487625 sec), logging

[17-Jun-2013 15:27:51] WARNING: [pool www] child 45277, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (34.859741 sec), logging

[17-Jun-2013 15:28:11] WARNING: [pool www] child 45337, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (31.740100 sec), logging

[17-Jun-2013 15:28:11] WARNING: [pool www] child 45336, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (35.493527 sec), logging

[17-Jun-2013 15:28:11] WARNING: [pool www] child 45321, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.162045 sec), logging

[17-Jun-2013 15:28:21] WARNING: [pool www] child 45342, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.866735 sec), logging

[17-Jun-2013 15:28:31] WARNING: [pool www] child 45353, script '/data/disk/tn/static/transition-network-d6-p005/index.php' (request: "GET /index.php") executing too slow (39.079867 sec), logging

And a lot of these in the daemon.log:

Jun 17 15:29:54 puffin mysqld: 130617 15:29:54 [Warning] Aborted connection 18018 to db: 'masterpuffinwe_0' user: 'masterpuffinwe_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18055 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18024 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18028 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18025 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18057 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18039 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18026 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18019 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18022 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18062 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18043 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown 
error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18011 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18040 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18036 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18021 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18030 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18044 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18045 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18047 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18046 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18033 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:55 puffin mysqld: 130617 15:29:55 [Warning] Aborted connection 18069 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:29:58 puffin mysqld: 130617 15:29:58 [Warning] Aborted connection 18010 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' 
(Unknown error) Jun 17 15:30:14 puffin mysqld: 130617 15:30:13 [Warning] Aborted connection 18075 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:14 puffin mysqld: 130617 15:30:14 [Warning] Aborted connection 18058 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:17 puffin mysqld: 130617 15:30:17 [Warning] Aborted connection 18023 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18067 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18037 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18029 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18035 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18038 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18054 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18032 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18017 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18064 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 
'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18034 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18060 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18063 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18065 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18050 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18013 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18016 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18041 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18027 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18009 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18008 to db: 'transitionnetw_0' user: 'transitionnetw_0' host: 'localhost' (Unknown error) Jun 17 15:30:22 puffin mysqld: 130617 15:30:22 [Warning] Aborted connection 18020 to db: 'transitionnetw_0' user: 'transitionnetw_0' 
host: 'localhost' (Unknown error)

However, there are also other periods with many of these MySQL errors that did not result in the server going down; between 5pm on June 16th and 9am on June 18th there are 328 in total:

grep "Aborted connection " daemon.log | wc -l
328
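To see whether these warnings cluster around the outages or are spread across the whole period, the same match could be bucketed per minute rather than counted in total. This is only a rough sketch: it assumes the syslog-style layout shown above (month, day and HH:MM:SS as the first three fields), and the function name `aborted_per_minute` is made up for illustration.

```shell
# Count "Aborted connection" warnings per minute in a syslog-style log.
# Assumption: fields 1-3 are month, day and HH:MM:SS, as in the
# daemon.log excerpts above. aborted_per_minute is a hypothetical name.
aborted_per_minute() {
    awk '/Aborted connection / {
             # Key each line on "Mon DD HH:MM" (seconds dropped)
             count[$1 " " $2 " " substr($3, 1, 5)]++
         }
         END {
             for (minute in count) print count[minute], minute
         }' "$1" \
        | sort -rn    # busiest minutes first
}
```

Running `aborted_per_minute daemon.log | head` would then list the busiest minutes first, which can be compared against the alert times.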

I'm afraid I still don't know what is causing these outages.

comment:38 Changed 3 years ago by chris

- Add Hours to Ticket changed from 0.0 to 0.3

- Total Hours changed from 8.23 to 8.53

It just happened again at 10am: the load peaked at around 30 and the site went down for around 5 minutes.

Email Alerts

Date: Tue, 18 Jun 2013 10:01:11 +0100 (BST)
Subject: lfd on puffin.webarch.net: High 5 minute load average alert - 8.93
Time: Tue Jun 18 10:01:11 2013 +0100
1 Min Load Avg: 30.61
5 Min Load Avg: 8.93
15 Min Load Avg: 3.45
Running/Total Processes: 45/371

Date: Tue, 18 Jun 2013 10:05:07 +0100
Subject: ** PROBLEM Service Alert: puffin/HTTP is CRITICAL **
***** Nagios *****
Notification Type: PROBLEM
Service: HTTP
Host: puffin
Address: puffin.webarch.net
State: CRITICAL
Date/Time: Tue Jun 18 10:05:07 BST 2013
Additional Info: Connection refused

Date: Tue, 18 Jun 2013 10:05:18 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

CRITICALs: load is 14.37 (outside range [:8]).

Date: Tue, 18 Jun 2013 10:06:04 +0100
Subject: DOWN alert: www.transitionnetwork.org (www.transitionnetwork.org) is DOWN
PingdomAlert DOWN: www.transitionnetwork.org (www.transitionnetwork.org) is down since 18/06/2013 10:01:57.

And Pingdom reported it back up after 6 mins down:

Date: Tue, 18 Jun 2013 10:08:03 +0100
Subject: UP alert: www.transitionnetwork.org (www.transitionnetwork.org) is UP
PingdomAlert UP: www.transitionnetwork.org (www.transitionnetwork.org) is UP again at 18/06/2013 10:07:59, after 6m of downtime.

Date: Tue, 18 Jun 2013 10:10:07 +0100
Subject: ** RECOVERY Service Alert: puffin/HTTP is OK **
***** Nagios *****
Notification Type: RECOVERY
Service: HTTP
Host: puffin
Address: puffin.webarch.net
State: OK
Date/Time: Tue Jun 18 10:10:07 BST 2013
Additional Info: HTTP OK: HTTP/1.1 200 OK - 692 bytes in 0.004 second response time

Date: Tue, 18 Jun 2013 10:10:32 +0100

Subject: puffin.transitionnetwork.org Munin Alert

transitionnetwork.org :: puffin.transitionnetwork.org :: Load average

WARNINGs: load is 5.98 (outside range [:4]).

Log Entries

daemon.log: